Health systems worldwide are under continuous pressure. Aging populations, a growing burden of chronic disease, and a persistent shortage of clinicians have pushed many organizations to the limits of their capacity, with burnout as a predictable consequence. On top of this sits a heavy layer of administrative work: the Canadian Medical Association reports that 60% of physicians link paperwork to declining mental health, and that Canadian doctors collectively spend 18.5 million hours each year on tasks that add little value. Another survey found that 87% of healthcare workers stayed late to finish documentation, time that could have gone to patients or professional development. The result is more errors, longer wait times, and declining clinician satisfaction. Against that backdrop, agentic AI in healthcare is emerging as a practical way to relieve some of that pressure and give time back to care.

Agentic AI uses several small agents working together rather than a single standalone chatbot. Each agent handles a specific part of the work, and a human still makes the final calls. In healthcare, this setup can cover many steps in a workflow. It can draft clinical notes, book follow-up visits, prepare prior authorization requests, monitor patients at home, and offer options to clinicians when they need to decide what to do next.

We’ve already covered AI agents in healthcare. In this article, we go deeper into what agentic AI is, how it differs from generative AI, where it delivers value to providers, and what it takes to implement it safely and responsibly.

What Is Agentic AI for Healthcare Providers, and How Does It Differ From Generative AI?

Most people are already familiar with generative AI tools like ChatGPT, which now sit at the center of many healthcare technology trends and produce text, images, or code when prompted. In healthcare, they can answer questions or draft a discharge summary, but they respond only on demand and do not independently plan or carry out multi-step work.

Agentic systems add a layer that generative models lack. A central coordinator breaks a task into smaller parts and sends each part to a specialized agent or tool. These agents work with narrow permissions and handle targeted jobs, such as pulling lab values, summarizing an imaging report, or checking recent literature, and then send their output back to the coordinator. The orchestration layer manages planning, guardrails, retries, dependencies, and permissions so that no agent acts on its own or outside validated boundaries.
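To make the coordinator pattern more concrete, here is a minimal Python sketch of an orchestration loop. It assumes each agent is a plain function, that the plan is a fixed list of steps, and that permissions are simple string scopes; all names (`Step`, `Coordinator`, `fetch_labs`, and so on) are hypothetical and not taken from any specific agent framework. It illustrates three of the guardrails above: a permission check before dispatch, bounded retries, and escalation to a human when a step cannot run.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical agent signature: read the shared context, return new facts.
AgentFn = Callable[[dict], dict]


@dataclass
class Step:
    agent: str  # which specialized agent runs this step
    scope: str  # permission the step needs, e.g. "read:labs"


@dataclass
class Coordinator:
    agents: dict[str, AgentFn]
    grants: dict[str, set[str]]  # agent name -> scopes it is allowed to use
    max_retries: int = 2
    escalations: list[str] = field(default_factory=list)

    def run(self, plan: list[Step], context: dict) -> dict:
        for step in plan:
            # Guardrail: never dispatch a step outside the agent's granted scopes.
            if step.scope not in self.grants.get(step.agent, set()):
                self.escalations.append(f"{step.agent}: scope {step.scope} not granted")
                continue
            # Bounded retries; after the last failure, escalate to a human.
            for attempt in range(self.max_retries + 1):
                try:
                    context.update(self.agents[step.agent](context))
                    break
                except Exception as exc:  # broad catch keeps the sketch short
                    if attempt == self.max_retries:
                        self.escalations.append(f"{step.agent}: failed after retries ({exc})")
        return context


# Usage: one flaky agent and one step that asks for a permission it lacks.
calls = {"imaging": 0}


def fetch_labs(ctx: dict) -> dict:
    return {"potassium": 5.9}


def summarize_imaging(ctx: dict) -> dict:
    calls["imaging"] += 1
    if calls["imaging"] == 1:
        raise TimeoutError("imaging service timed out")
    return {"imaging_summary": "No acute findings."}


def draft_order(ctx: dict) -> dict:
    return {"order": "repeat potassium"}


coordinator = Coordinator(
    agents={"labs": fetch_labs, "imaging": summarize_imaging, "orders": draft_order},
    grants={"labs": {"read:labs"}, "imaging": {"read:imaging"}, "orders": {"draft:orders"}},
)
plan = [Step("labs", "read:labs"), Step("imaging", "read:imaging"), Step("orders", "write:orders")]
result = coordinator.run(plan, {"patient_id": "demo-001"})
```

Here the imaging agent fails once and succeeds on retry, while the order step is never dispatched because it requests `write:orders` and the orders agent was only granted `draft:orders`; that step lands in `coordinator.escalations` for a person to handle. Timeouts, concurrency limits, and cost tracking would sit in the same loop in a fuller implementation.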

The design borrows from how teams function in real organizations. Each agent acts like a team member with a defined responsibility, and the coordinator behaves like a manager, gathering the necessary information and directing the next step. Current agentic frameworks also include features such as memory, reasoning, and orchestration. Short-term memory keeps the immediate context of a case, and long-term memory stores information that accumulates across different encounters. Reasoning components help break a complex task into smaller units of work.
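The split between short-term and long-term memory can be illustrated with a small Python sketch. It assumes in-process data structures stand in for whatever store a real deployment would use (which would also need encryption, access control, and retention rules); `AgentMemory` and its methods are hypothetical names.

```python
from collections import defaultdict, deque


class AgentMemory:
    """Toy memory: a bounded per-case buffer plus a per-patient fact store."""

    def __init__(self, short_term_size: int = 5):
        # Short-term: the last few turns of the current case; old turns fall off.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: facts that persist across encounters, keyed by patient.
        self.long_term: dict[str, dict[str, str]] = defaultdict(dict)

    def observe(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember(self, patient_id: str, key: str, value: str) -> None:
        self.long_term[patient_id][key] = value

    def context_for(self, patient_id: str) -> dict:
        # What an agent would see when it starts its next step.
        return {
            "recent": list(self.short_term),
            "history": dict(self.long_term.get(patient_id, {})),
        }

    def end_case(self) -> None:
        # Case context is discarded; long-term facts survive.
        self.short_term.clear()


mem = AgentMemory(short_term_size=2)
mem.observe("Patient reports chest tightness")
mem.observe("BP 150/95")
mem.observe("ECG ordered")
mem.remember("p-42", "allergy", "penicillin")
ctx = mem.context_for("p-42")
mem.end_case()
```

With a buffer of two, the oldest turn drops out of `ctx["recent"]`, while the allergy recorded in long-term memory is still available after `end_case()` for the patient's next encounter.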

Core capabilities: perception, reasoning, memory, and action

To function effectively in healthcare, agents must exhibit four core capabilities:

- Perception. Agents ingest and understand diverse data types, including structured electronic health records (EHRs), unstructured clinical notes, medical images, sensor readings, and genomic sequences. Multimodal models translate these inputs into a unified representation.

- Reasoning. Agents apply domain knowledge and statistical algorithms to interpret data, generate differential diagnoses, predict deterioration, and recommend interventions. Reasoning may involve large language models (LLMs), knowledge graphs, or explicit rules.

- Memory. They retain important details from earlier interactions so they don’t repeat steps and can adjust responses based on the patient’s history. Long-term memory supports ongoing care across multiple encounters.

- Action. They complete practical tasks such as drafting notes, preparing test orders within set limits, sending reminders, or updating records. Their permissions stay narrow to maintain privacy and safety.

Agentic AI versus traditional AI

Traditional healthcare AI usually handles one task at a time, such as reading an image or estimating readmission risk. It waits for someone to trigger it and does nothing beyond that single step.

Agentic AI works differently. It can plan and run a sequence of tasks across different data sources and systems, rather than relying on a clinician to stitch everything together. The system manages its own workflow but stays within the limits set for it.

Human oversight remains essential. Clinicians define the goal, review the results, and step in when needed, so responsibility for care stays with the care team.

Architectural Foundations: Multimodal Data, Orchestration, and Compliance

Orchestrating specialized healthcare agents

A practical agentic system combines a coordinating agent with several task-specific agents (e.g., documentation, coding support, appointments, benefits/prior authorization, and remote monitoring triage). The coordinator maintains state, routes work, resolves conflicts, and escalates issues to the right people. This pattern makes it easy to add new agents without redesigning the entire system, and it limits each agent’s data access to only what it needs.
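The registry idea can be sketched in Python as follows: each task-specific agent registers the record fields it may read, and the coordinator hands it only that slice of the record. Task types with no registered agent are escalated to staff. The `AgentRegistry` class, the field names, and the placeholder procedure code `MRI-LSPINE` are illustrative assumptions.

```python
from typing import Callable

Handler = Callable[[dict], str]


class AgentRegistry:
    def __init__(self) -> None:
        self._agents: dict[str, tuple[frozenset[str], Handler]] = {}

    def register(self, task_type: str, fields: set[str], handler: Handler) -> None:
        # Adding a new agent is one call; nothing else in the system changes.
        self._agents[task_type] = (frozenset(fields), handler)

    def route(self, task_type: str, record: dict) -> str:
        if task_type not in self._agents:
            return f"ESCALATE: no agent for '{task_type}'"
        fields, handler = self._agents[task_type]
        # Least privilege: the agent sees only the fields it registered for.
        view = {k: v for k, v in record.items() if k in fields}
        return handler(view)


registry = AgentRegistry()
registry.register(
    "appointments", {"patient_id", "preferred_times"},
    lambda v: f"Booked {v['patient_id']} at {v['preferred_times'][0]}",
)
registry.register(
    "prior_auth", {"patient_id", "procedure_code", "payer"},
    lambda v: f"Drafted PA for {v['procedure_code']} ({v['payer']})",
)

record = {
    "patient_id": "p-7",
    "preferred_times": ["Tue 09:00"],
    "procedure_code": "MRI-LSPINE",  # hypothetical placeholder code
    "payer": "DemoPayer",
    "diagnosis_notes": "sensitive free text",  # no registered agent can read this
}
```

Calling `registry.route("appointments", record)` books the visit without the scheduling agent ever seeing `diagnosis_notes`, and an unregistered task such as `"billing"` returns an escalation message instead of falling through to some default agent.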

Multimodal data fusion and interoperability

Care is inherently multimodal, spanning narrative notes, laboratory results, images, vital signs, device data, and claims. Agentic systems should normalize and fuse these inputs so that downstream agents can reason across them. Interoperability standards remain essential: HL7 messaging and FHIR APIs for data exchange, DICOM for images, and LOINC, SNOMED CT, and ICD-10 for shared semantics. They let agents integrate with the EHR, PACS, LIS, and care management tools rather than becoming yet another silo.
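As an illustration of normalization, the Python sketch below flattens a FHIR R4 `Observation` resource (shown as plain JSON) into a simple row that downstream agents could reason over. It reads only standard `Observation` elements (`code.coding`, `valueQuantity`, `effectiveDateTime`, `subject`); the output row layout and the `normalize_observation` function are assumptions, and a production pipeline would more likely rely on a maintained FHIR client and a terminology service.

```python
import json

LOINC = "http://loinc.org"

# A heart-rate observation coded with LOINC 8867-4 and a UCUM unit.
observation_json = """
{
  "resourceType": "Observation",
  "status": "final",
  "subject": {"reference": "Patient/example"},
  "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
  "effectiveDateTime": "2025-03-01T08:30:00Z",
  "valueQuantity": {"value": 112, "unit": "beats/minute",
                    "system": "http://unitsofmeasure.org", "code": "/min"}
}
"""


def normalize_observation(resource: dict) -> dict:
    """Flatten a FHIR Observation into a row keyed by its LOINC code."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")
    # Prefer the LOINC coding; other code systems would need mapping first.
    loinc = next(
        (c for c in resource["code"].get("coding", []) if c.get("system") == LOINC),
        None,
    )
    if loinc is None:
        raise ValueError("no LOINC coding found")
    qty = resource.get("valueQuantity", {})
    return {
        "patient": resource.get("subject", {}).get("reference"),
        "loinc": loinc["code"],
        "label": loinc.get("display"),
        "value": qty.get("value"),
        "unit": qty.get("code") or qty.get("unit"),  # prefer the UCUM code
        "time": resource.get("effectiveDateTime"),
    }


row = normalize_observation(json.loads(observation_json))
```

Keying rows on the standard code rather than on free-text labels is what lets a monitoring agent and a documentation agent agree that two readings from different devices are the same measurement.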

Compliance, privacy, and human-in-the-loop mechanisms

Privacy and trust are central. The 2025 Watch List highlights security incidents in hospitals and notes that only 21% of surveyed Canadian physicians felt confident about AI and patient confidentiality. It also outlines privacy regimes across jurisdictions and stresses transparent disclosure, auditability, and data governance.

Practical guardrails typically include:

- Least-privilege access and encryption at rest and in transit.

- Explicit consent flows and data-minimization patterns (for example, use codes, aggregates, or derived indicators when possible).

- Regional data residency for protected health information (PHI): all PHI processing stays in-region or within private environments covered by signed business associate agreements (BAAs) or completed data protection impact assessments (DPIAs).

- Human checkpoints for diagnostic and treatment-related outputs, with clinician attestation. Outputs should automatically route for review when confidence thresholds are low or when the data falls outside the model’s known or validated range.

- Tamper-evident audit trails that record which agent performed which action, with what inputs, and the reasons behind it. Logs should be immutable or stored in systems that ensure integrity so every action can be fully audited.

- Cost and loop controls at the orchestration layer, including timeouts, concurrency limits, and per-task cost tracking to avoid runaway execution or unexpected API spend.

- Ongoing monitoring for drift, bias, latency spikes, and failure recovery. Any model or prompt changes should pass defined safety checks and regression tests before rollout in clinical environments, especially where outputs can influence care.
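Two of these guardrails, tamper-evident audit trails and confidence-based review routing, can be sketched in a few lines of Python. The audit log below chains each entry to the previous one with a SHA-256 hash, so editing any earlier entry breaks verification. The 0.85 threshold, the field names, and the `AuditLog` and `route_output` names are illustrative assumptions; a real deployment would also write entries to append-only, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, agent: str, action: str, inputs: dict, reason: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"agent": agent, "action": action, "inputs": inputs,
                "reason": reason, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


def route_output(confidence: float, in_validated_range: bool, threshold: float = 0.85) -> str:
    # Low confidence or out-of-range input is flagged for closer clinician review.
    if confidence < threshold or not in_validated_range:
        return "clinician_review"
    return "routine_attestation"


log = AuditLog()
log.append("labs_agent", "read", {"test": "potassium"}, "pre-visit summary")
log.append("notes_agent", "draft_note", {"encounter": "e-1"}, "scribe request")
ok_before = log.verify()
log.entries[0]["reason"] = "edited later"  # simulate tampering
ok_after = log.verify()
```

Both routes still end with a clinician: the threshold only decides whether an output is flagged for closer review or goes through the routine attestation step.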

High-Impact Agentic AI Use Cases in Healthcare in 2026

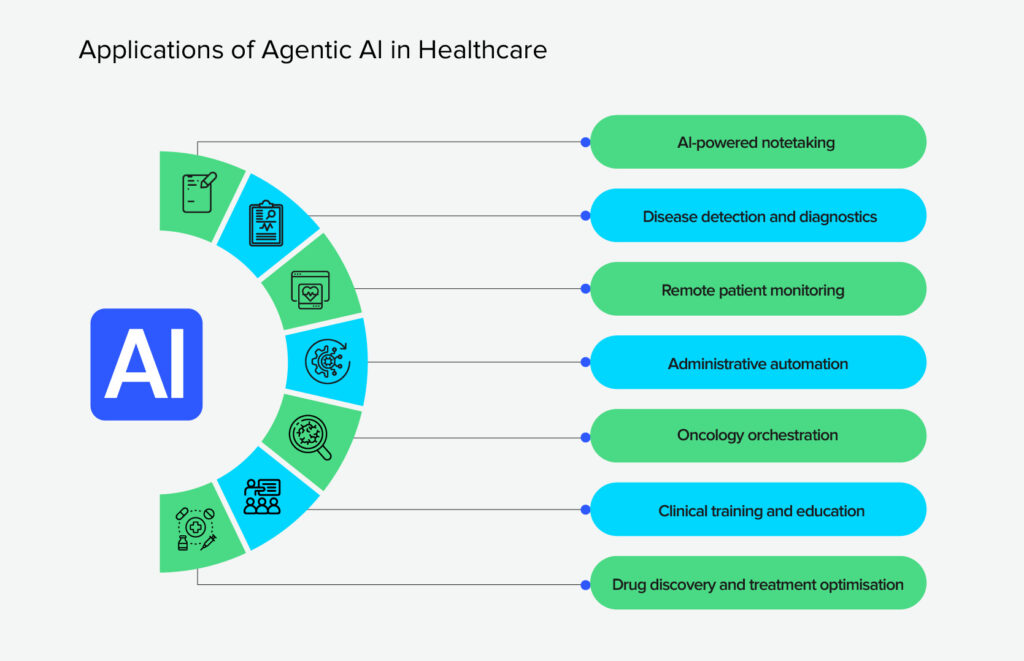

Agents span a broad range of healthcare applications, from administrative tasks to complex diagnostics. Here are some of the most promising:

AI notetaking

One of the clearest early wins for agentic AI is notetaking: tools that handle documentation during a visit. Documentation consumes a significant share of clinician time, often after hours. As noted earlier, 60% of physicians tie paperwork to declining mental health, and Canadian doctors collectively lose about 18.5 million hours each year to tasks suitable for automation. Human scribes help, but they are expensive, hard to train, and difficult to scale across a whole health system. Older digital scribes automate parts of the job, but they often miss context and still leave much of the editing to the clinician.

Agentic AI scribes go further. They use speech recognition and language models to listen to the conversation, distinguish who is saying what, and turn it into a clean, structured note that fits directly into the EHR. They also follow clinical logic more closely, pulling out symptoms, timelines, medications, and plans instead of transcribing everything verbatim.

A pilot program at the Permanente Medical Group showed what most teams hope for: less after-hours charting, better-quality notes, and physicians who actually felt more present with patients. It’s one of the few AI use cases that gives time back without disrupting how clinicians practice.

However, risks remain. AI scribes may hallucinate, documenting events that did not occur, or omit critical details, so clinicians must review notes carefully. They may also struggle with multilingual conversations or technical jargon. Experts recommend robust error checking, multilingual support, and mandatory human review to ensure accuracy.
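One simple form of error checking is a grounding check: every medication the draft note mentions should also appear in the visit transcript, and every medication discussed should appear in the note. Mismatches are flagged for the clinician, not auto-corrected. The Python sketch below uses exact word matching against a small hypothetical vocabulary; a real system would need synonyms, brand names, dosage parsing, and a proper drug terminology, so treat this as an illustration of the idea rather than a safety control.

```python
import re

# Hypothetical vocabulary; a real system would use a drug terminology source.
MEDICATIONS = {"metformin", "lisinopril", "atorvastatin", "warfarin", "insulin"}


def mentioned_meds(text: str) -> set[str]:
    words = set(re.findall(r"[a-z]+", text.lower()))
    return words & MEDICATIONS


def ungrounded_meds(draft_note: str, transcript: str) -> set[str]:
    """Medications in the draft that nobody mentioned during the visit."""
    return mentioned_meds(draft_note) - mentioned_meds(transcript)


def omitted_meds(draft_note: str, transcript: str) -> set[str]:
    """Medications discussed in the visit that the draft left out."""
    return mentioned_meds(transcript) - mentioned_meds(draft_note)


transcript = (
    "Doctor: Are you still taking metformin twice a day? "
    "Patient: Yes, and the lisinopril in the morning."
)
draft = "Continue metformin 500 mg BID. Start atorvastatin 20 mg nightly."
```

Here `ungrounded_meds(draft, transcript)` surfaces atorvastatin (a possible hallucination, or a plan the clinician added later) and `omitted_meds` surfaces lisinopril; either way, the clinician decides.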

Disease detection and diagnostics

Early detection is one of the biggest levers there is in medicine, but it’s also one of the hardest things to get right. Clinicians have to pull together clues from scans, labs, notes, and history.

By August 2024, the FDA had cleared around 950 AI-powered medical devices, and most of them are aimed at exactly this problem: helping spot disease earlier. Radiology has moved fastest. AI now supports reading mammograms, ultrasounds, and CT scans, adding another layer of pattern-checking that doesn’t get tired or distracted.

A couple of examples make it concrete. ASIST-TBI scans emergency department (ED) CTs and flags possible traumatic brain injuries with more than 80% accuracy. LumeNeuro uses polarimetric imaging to highlight retinal changes that may be linked to Alzheimer’s disease. These systems don’t diagnose on their own, but they do pick up subtle patterns humans might not notice under pressure. That extra signal often means clinicians can move toward the proper intervention sooner.

AI remote patient monitoring (RPM)

Remote monitoring uses sensors and wearables to collect patient data at home and AI to analyze and interpret it. According to the 2025 Watch List, AI algorithms process real-time heart rate, respiration, blood pressure, and activity data, generate alerts, and predict adverse events. AlayaCare’s machine-learning platform improved event predictions by 11% and reduced overdiagnoses by 54%. A Canadian clinical trial also found that the platform reduced emergency department visits by 68% and hospitalizations by 35% for patients with COPD or heart failure. Over three months, the average cost of emergency visits fell from $243 to $67, and hospitalization costs dropped from $3,842 to $1,399.
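The alerting step of a remote monitoring pipeline can be sketched as a comparison against each patient's own recent baseline. The Python example below flags a reading that sits more than a set number of standard deviations from the rolling mean of earlier readings. The window size, the 3-sigma cutoff, and the `BaselineAlert` name are assumptions for illustration, and this z-score rule is far simpler than the predictive models the Watch List describes.

```python
from collections import deque
from statistics import mean, stdev


class BaselineAlert:
    """Flag readings far from the patient's own rolling baseline."""

    def __init__(self, window: int = 12, z_cutoff: float = 3.0, min_points: int = 5):
        self.readings: deque[float] = deque(maxlen=window)
        self.z_cutoff = z_cutoff
        self.min_points = min_points

    def add(self, value: float) -> bool:
        alert = False
        if len(self.readings) >= self.min_points:
            baseline = list(self.readings)
            mu, sigma = mean(baseline), stdev(baseline)
            # Guard against a perfectly flat baseline (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.z_cutoff:
                alert = True
        # Every reading joins the baseline, so a sustained change eventually
        # becomes the new normal; a real system would decide this deliberately.
        self.readings.append(value)
        return alert


monitor = BaselineAlert()
heart_rate = [72, 75, 71, 74, 73, 76, 72, 118]
alerts = [monitor.add(hr) for hr in heart_rate]
```

Only the final reading of 118 bpm triggers an alert. Comparing against the patient's own history, rather than a population-wide threshold, is one way to cut false alarms for patients whose normal range is unusual.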

Remote monitoring expands access to care, especially for rural and underserved populations, by reducing the need for in-person visits. It helps clinicians allocate resources more efficiently and adjust treatment plans as conditions change. For practical examples of how remote-monitoring tools connect with virtual visits, see our article on telemedicine app development.

Administrative automation

Administrative tasks are ripe for automation. Voice agents and chatbots can schedule appointments, send reminders, and answer patient questions in natural language. TechTarget reports that these agents reduce no-show rates by enabling two-way conversations and assisting with prior authorizations, saving clinicians considerable time. AI agents also draft clinical notes using voice recognition, easing the documentation burden, and gather post‑visit feedback to predict complications and support patient engagement.
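Two-way reminders work because the patient can confirm, cancel, or ask to reschedule in the same thread, and the agent acts on the reply. The Python sketch below shows only the reply-handling step, using keyword matching with word boundaries; in practice a language model would classify free-text replies. The statuses, keywords, and the rule that unclear replies go to staff are assumptions.

```python
import re
from dataclasses import dataclass


@dataclass
class Appointment:
    patient: str
    slot: str
    status: str = "reminder_sent"


# Checked in order: reschedule and cancel come first so that
# "Yes, but can we reschedule?" is not read as a confirmation.
KEYWORDS = {
    "reschedule_requested": ("reschedule", "another time", "different day"),
    "cancelled": ("cancel", "can't make it", "cannot make it"),
    "confirmed": ("yes", "confirm", "see you"),
}


def handle_reply(appt: Appointment, reply: str) -> Appointment:
    text = reply.lower()
    for status, phrases in KEYWORDS.items():
        # Word boundaries keep "yes" from matching inside "yesterday".
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases):
            appt.status = status
            return appt
    # Anything the agent cannot interpret goes to a human, not a guess.
    appt.status = "needs_staff_followup"
    return appt


confirmed = handle_reply(Appointment("p-1", "Tue 09:00"), "Yes, see you then")
moved = handle_reply(Appointment("p-2", "Wed 14:00"), "Can we reschedule? Yes please")
unclear = handle_reply(Appointment("p-3", "Thu 10:00"), "What should I bring?")
```

Routing unrecognized replies to staff trades some automation for safety: a misread cancellation would leave an empty slot or a patient who shows up unexpectedly.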

Other administrative use cases include claims processing, coding, and denial management. By eliminating manual data entry, hospitals can speed up reimbursement, reduce errors, and free staff for higher-value work. For these functions, agentic AI can deliver a faster ROI and offers a lower-risk entry point than diagnostic or treatment-planning applications.

Oncology orchestration

Modern cancer care is a data puzzle: imaging, pathology, genomics, treatment history, you name it. No single clinician has the time to stitch all of that together for every patient manually. That’s where agentic systems start to make sense.

A good example is GE HealthCare’s virtual tumor board. Behind the scenes, you have separate agents doing different jobs: one analyzes scans, another interprets genomic variants, and another looks at biomarkers or prior treatments. A coordinating agent pulls all that together into a coherent view and drafts a possible treatment approach.

Nothing gets auto-approved; the clinical team still discusses and signs off, but the heavy data lifting is done in advance. In practice, it shortens the time between diagnosis and treatment and supports more personalized plans. It also helps clinicians spot things like eligibility for targeted therapies or open clinical trials that might otherwise be missed.
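The fan-out and merge pattern behind a virtual tumor board can be sketched with Python's standard `concurrent.futures`. This is not a description of GE HealthCare's implementation: the three specialist functions are hypothetical stand-ins that return canned findings. The sketch shows the structure only. Specialists run in parallel, the coordinator merges their outputs into one case packet, and the packet stays pending until a clinician signs off.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialist agents returning canned findings for illustration.
def imaging_agent(case: dict) -> dict:
    return {"imaging": f"{case['site']} lesion, 2.1 cm"}


def genomics_agent(case: dict) -> dict:
    return {"genomics": "actionable variant reported"}


def history_agent(case: dict) -> dict:
    return {"history": "no prior systemic therapy"}


SPECIALISTS = [imaging_agent, genomics_agent, history_agent]


def prepare_board_packet(case: dict) -> dict:
    # Run specialists concurrently; each sees the same case input.
    with ThreadPoolExecutor(max_workers=len(SPECIALISTS)) as pool:
        results = list(pool.map(lambda agent: agent(case), SPECIALISTS))
    packet = {"case_id": case["case_id"]}
    for finding in results:
        packet.update(finding)
    # Nothing is auto-approved: the packet is a draft for the tumor board.
    packet["status"] = "pending_clinician_signoff"
    return packet


def sign_off(packet: dict, clinician: str, plan: str) -> dict:
    # Returns a new record, so the original draft stays unchanged for the audit trail.
    return {**packet, "status": "approved", "signed_by": clinician, "plan": plan}


packet = prepare_board_packet({"case_id": "c-1", "site": "lung"})
signed = sign_off(packet, "Dr. Example", "Discussed at board; plan recorded by team")
```

Keeping approval as a separate, explicit step makes it impossible for the merged draft to reach a treatment record without a named clinician attached.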

Clinical training and education

Some of the most practical uses of AI today are happening in training. Intelligent tutors can adapt to how a student learns, point out misunderstandings, and adjust the difficulty of questions on the fly, which is far more useful than generic quiz banks.
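One simple way a tutor can adjust difficulty on the fly is a staircase rule: move up a level after two correct answers in a row and down a level after any miss. The Python sketch below implements that rule with hypothetical levels 1 to 5; it is an illustrative choice, and real tutoring systems often use richer learner models such as item response theory.

```python
class Staircase:
    """Raise difficulty after two correct answers in a row; lower it after a miss."""

    def __init__(self, level: int = 1, lowest: int = 1, highest: int = 5):
        self.level = level
        self.lowest = lowest
        self.highest = highest
        self._streak = 0  # consecutive correct answers at the current level

    def record(self, correct: bool) -> int:
        if correct:
            self._streak += 1
            if self._streak == 2:
                self.level = min(self.level + 1, self.highest)
                self._streak = 0
        else:
            self.level = max(self.level - 1, self.lowest)
            self._streak = 0
        return self.level


tutor = Staircase()
levels = [tutor.record(answer) for answer in [True, True, True, True, False, True]]
```

Requiring two successes to move up but only one miss to move down keeps the learner near the edge of their ability without letting a lucky guess push them too far ahead.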

Simulation systems have also gotten smarter. AI can generate dynamic patient scenarios where vitals change based on the trainee’s decisions. You can run through rare emergencies, communication challenges, or complex cases without putting anyone at risk.

Drug discovery and treatment optimization

In pharma, agentic AI is essentially a way to stop guessing in the dark. It helps narrow the search: which molecules are likely to bind, which ones look toxic, which combinations are worth taking into a wet lab. Agents can chain these steps together: generate candidates, score them, check safety signals, and hand a ranked list to scientists.
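The generate, score, screen, and rank chain can be sketched as a small Python pipeline. Every candidate and number here is a made-up placeholder; in a real workflow, the scores would come from binding-affinity or docking models and toxicity predictors. The sketch only shows how an agent can string the steps together and hand scientists a ranked shortlist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    binding_score: float  # higher is better (placeholder units)
    tox_risk: float       # 0..1, lower is safer (placeholder)


def generate() -> list[Candidate]:
    # Placeholder for a generative model proposing molecules.
    return [
        Candidate("cand-A", 0.82, 0.10),
        Candidate("cand-B", 0.91, 0.65),
        Candidate("cand-C", 0.77, 0.05),
        Candidate("cand-D", 0.40, 0.02),
    ]


def screen(cands: list[Candidate], max_tox: float, min_binding: float) -> list[Candidate]:
    # Drop anything with a safety signal or weak predicted binding.
    return [c for c in cands if c.tox_risk <= max_tox and c.binding_score >= min_binding]


def rank(cands: list[Candidate], top_k: int) -> list[str]:
    ordered = sorted(cands, key=lambda c: c.binding_score, reverse=True)
    return [c.name for c in ordered[:top_k]]


shortlist = rank(screen(generate(), max_tox=0.3, min_binding=0.5), top_k=2)
```

Note that the strongest binder, `cand-B`, never reaches the shortlist because it fails the safety screen. Putting the screen before ranking is the design choice that keeps attractive but risky candidates off the scientists' list.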

The same idea applies downstream. AI helps simulate how a drug might behave in different populations, suggest smarter trial designs, or flag subgroups that respond differently. None of this replaces clinical research, but it cuts down the number of dead ends. In practice, that means fewer wasted cycles and a better chance of getting useful treatments to patients before the next health crisis hits.

Implementation Roadmap: From Pilot to Enterprise Scale

Deploying agentic AI requires organizational change, technical integration, and careful evaluation. Here is a step-by-step approach:

- Identify high‑value use cases. Start with tasks that yield clear benefits, such as appointment scheduling, documentation, or remote monitoring. Assess where automation can save time, reduce errors, and improve patient satisfaction. Use metrics like reduced no‑show rates, time saved per note, and cost savings to justify investment.

- Engage stakeholders early. Involve clinicians, administrators, IT, and compliance teams from the outset. Co‑design workflows, define success criteria, and ensure that AI systems augment rather than replace human expertise. Address concerns about job displacement by emphasizing that AI frees clinicians to focus on high‑touch tasks.

- Select a robust framework and vendor. Evaluate agentic AI platforms for memory, reasoning, orchestration, and integration with HL7/FHIR. Choose vendors with proven healthcare experience, transparent explainability tools, and relevant security certifications. Finally, align platform selection with organizational goals and risk tolerance.

- Pilot and iterate. Launch pilot projects in controlled settings, monitor outcomes, and refine prompts and workflows to optimize performance. For example, start with AI scribes in a single clinic or remote monitoring for a subset of patients with heart failure. Collect data on workload reduction, diagnostic accuracy, and patient satisfaction.

- Ensure compliance and governance. Implement privacy by design, encryption, role-based access, and audit logs. Set clear policies for consent, data use, and model updates. Create cross-functional governance boards to oversee AI usage and manage incidents. Provide ongoing training so clinicians and staff use agentic AI responsibly and effectively.

- Scale responsibly. Once pilots demonstrate value, expand to other departments or sites. Use continuous monitoring and quality improvement to detect biases, errors, or performance degradation. Integrate AI outputs into existing EHR workflows to avoid creating parallel systems. Measure return on investment through metrics such as improved throughput, reduced readmissions, and clinician satisfaction.

Challenges and Ethical Considerations

While the potential of agentic AI use cases in healthcare is immense, several challenges must be addressed:

- Data quality and bias. AI systems are only as good as the data on which they are trained. Biases in training data can lead to inequitable outcomes, such as misdiagnosis for underrepresented populations or inaccurate pulse oximetry readings. Data standards and continual monitoring are necessary to detect and mitigate bias.

- Privacy and security. Cyberattacks on hospitals and low clinician confidence in AI confidentiality necessitate robust encryption, adequate access controls, and transparent policies. Patient-facing disclosures should clearly explain where AI is used and how a human can review or override any AI decisions.

- Clinical trust and adoption. Clinicians must trust AI recommendations to use them effectively. Explainability, transparency, and robust evaluation are essential. AI should augment clinicians’ expertise, not undermine their autonomy.

- Cost and sustainability. Developing and maintaining agentic AI requires significant investment in infrastructure, model training, and integration. Gartner warns that many projects fail due to unclear value or rising costs. Healthcare organizations must carefully prioritize use cases and plan for long‑term sustainability.
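The continual bias monitoring mentioned under data quality often starts with a simple comparison: compute the same performance metric separately for each patient subgroup and flag groups that fall well behind the best one. The Python sketch below does this for sensitivity (the share of true cases the model catches). The group labels, the synthetic records, and the 0.10 gap tolerance are illustrative assumptions, not a validated fairness standard.

```python
from collections import defaultdict


def sensitivity_by_group(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, has_condition, model_flagged). Returns TP / (TP + FN) per group."""
    tp: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                tp[group] += 1
    return {g: tp[g] / positives[g] for g in positives}


def flag_gaps(sens: dict[str, float], tolerance: float = 0.10) -> list[str]:
    # Groups whose sensitivity trails the best-performing group by more than the tolerance.
    best = max(sens.values())
    return sorted(g for g, s in sens.items() if best - s > tolerance)


# Synthetic example: the model catches 9 of 10 cases in group A but only 6 of 10 in group B.
records = (
    [("A", True, True)] * 9 + [("A", True, False)] * 1
    + [("B", True, True)] * 6 + [("B", True, False)] * 4
    + [("B", False, False)] * 5
)
sens = sensitivity_by_group(records)
gaps = flag_gaps(sens)
```

A flagged gap is a prompt for investigation (data coverage, labeling, device effects such as pulse oximetry error), not proof of a specific cause.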

Conclusion

Agentic AI is different from the single-task tools most teams are familiar with. Instead of waiting for a prompt and doing a single narrow task, these systems can take in data, reason about it, maintain context, and execute predefined actions while clinicians remain in charge. In practice, that can mean less time on clerical work, more reliable follow-up, earlier risk signals from home, and decisions based on a clearer view of the patient.

These principles align closely with Svitla Systems’ approach to responsible AI delivery. Through our Data Audit, Bright AI Sessions, and PoC/MVP development programs, we help healthcare organizations evaluate opportunities, build secure agentic workflows, and scale solutions that improve care without compromising safety or compliance.

The closest wins are also the most practical. AI scribes can give clinicians back hours each week and make notes easier to read. Administrative agents can move routine scheduling and prior authorization off the clinician’s plate. Remote monitoring pipelines can scan device and sensor data, surface patients who need attention, and flag early signs of deterioration.

Whether this works in a real health system comes down to basics, not flashy models. You need use cases that match clinical priorities, integration through standards, privacy and security built in from day one, good logging of what agents do, and clear communication with clinicians so they always know what the system changed and why.

If you want to bring agentic AI into your organization, Svitla Systems can help you sort out use cases, design a realistic architecture, and connect new agents to your existing tools and workflows. Reach out to our team to discuss your idea.
