Over the past few years, the concept of artificial intelligence agents has garnered substantial attention in core initiatives spanning software engineering, enterprise platforms, and digital transformation.

Organizations are increasingly adopting AI-driven automation, and terms like AI agents and agentic AI have become commonplace. They are often used interchangeably, despite representing fundamentally different approaches to intelligent system design.

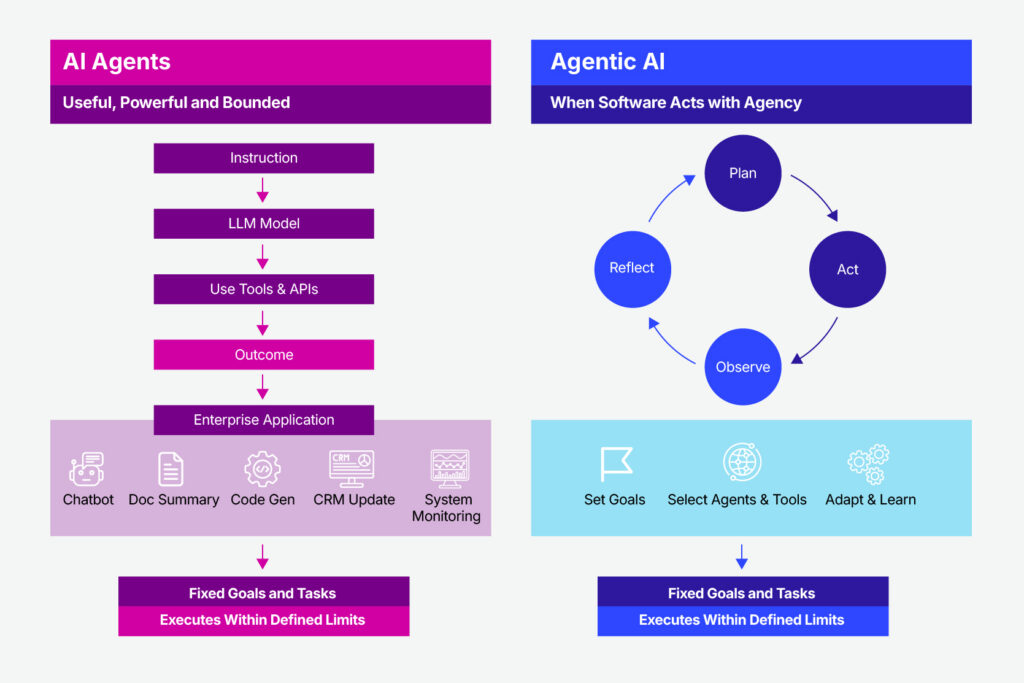

Confusing the two can cloud critical decisions about autonomy, governance, and system architecture. AI agents typically operate as task-oriented components embedded within predefined workflows, whereas agentic AI denotes systems capable of goal-driven behavior, adaptive planning, and sustained interaction with their environment.

Understanding this distinction is not merely a matter of terminology; it is a prerequisite for making informed architectural and organizational decisions. For technology leaders and engineering teams, it is crucial to designing scalable, governable, and resilient intelligent software systems.

This article examines these concepts from both architectural and practical perspectives, with a focus on their implications for modern software engineering.

Definition of AI Agents and Agentic AI

AI Agent

Within the context of modern software systems, an AI agent is defined as a computational entity designed to perform a specific task (or a bounded set of tasks) by perceiving inputs from its environment and executing predefined actions to achieve an immediate objective.

AI agents typically operate within clearly defined constraints, rely on externally provided goals, and are embedded into larger systems that orchestrate their behavior. Their decision-making capabilities are usually limited to selecting an appropriate action based on the current input, predefined rules, or learned patterns.

From an architectural perspective, AI agents function as components rather than autonomous systems. They are often stateless or maintain only short-term context and depend on external control flows for task sequencing, error handling, and escalation.

Common examples include recommendation engines, conversational bots, data extraction tools, or automated code assistants. While such agents may exhibit limited autonomy at the task level, they do not independently formulate goals, revise strategies over time, or reason beyond the scope of their immediate assignment.
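To make the component view concrete, here is a minimal Python sketch of a task-level agent embedded in an externally defined workflow. Everything in it is an illustrative assumption rather than a reference design: the `Ticket` type, the keyword rules inside `classify_ticket`, and the queue names in `run_workflow` are all hypothetical. A production agent would more likely call a model than match keywords.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    """Hypothetical input record; the fields are illustrative only."""
    ticket_id: str
    text: str


def classify_ticket(ticket: Ticket) -> str:
    """Stateless task agent: maps one input to one label.

    Keyword rules stand in for a model call so the sketch stays runnable.
    """
    text = ticket.text.lower()
    if "refund" in text or "invoice" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "unknown"


def run_workflow(tickets: list[Ticket]) -> dict[str, list[str]]:
    """External control flow: sequencing and escalation live here, not in the agent."""
    queues: dict[str, list[str]] = {"billing": [], "technical": [], "escalated": []}
    for ticket in tickets:
        label = classify_ticket(ticket)
        # The workflow, not the agent, decides what happens to unrecognized input.
        target = label if label in queues else "escalated"
        queues[target].append(ticket.ticket_id)
    return queues
```

Note that the agent keeps no state between calls and never decides what to do next. The surrounding workflow owns sequencing and escalation, which is what makes this arrangement predictable and easy to audit.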

Agentic AI

Agentic AI, by contrast, refers not to a single component but to a class of systems characterized by persistent agency, i.e., the capacity to pursue goals over time through self-directed decision-making, planning, and adaptation.

An agentic AI system is capable of decomposing high-level objectives into actionable steps, selecting and orchestrating tools, monitoring outcomes, and dynamically adjusting its behavior based on feedback from the environment.

A defining property of agentic AI is the presence of internal decision loops. These systems maintain memory across interactions, reason about future states, and re-plan when conditions change or when intermediate results deviate from expectations. Unlike traditional AI agents, agentic AI systems do not merely execute instructions. They determine what actions to take, when to take them, and how to sequence them to achieve a goal.

Architecturally, agentic AI represents a system-level capability rather than an isolated module. It often encompasses multiple specialized agents, shared memory, goal management mechanisms, and governance controls that collectively enable sustained autonomous behavior.

Conceptual Distinction

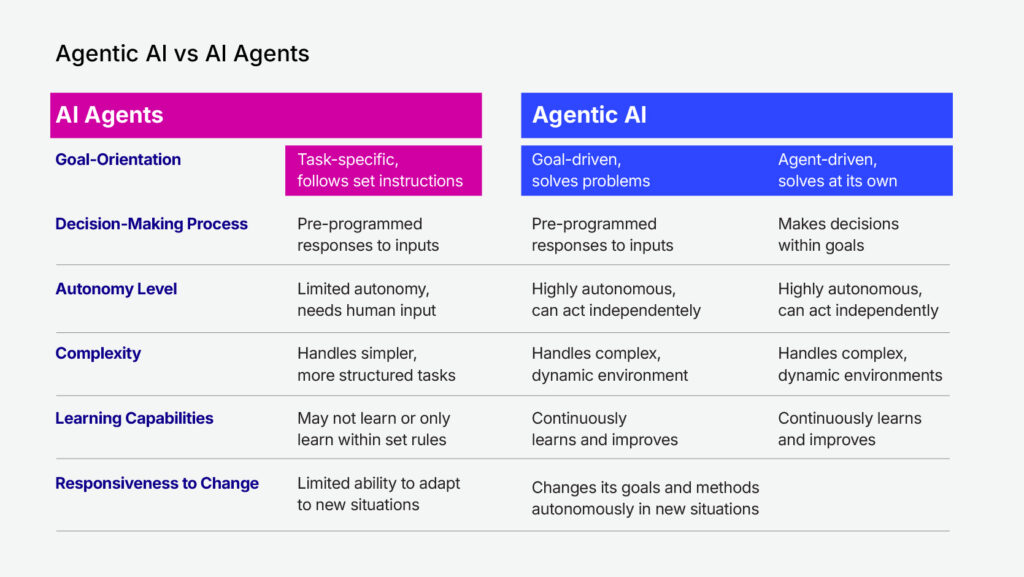

The essential distinction between AI agents and agentic AI lies in the concept of agency. AI agents execute tasks within predefined boundaries, whereas agentic AI systems exhibit goal-driven behavior that unfolds over time.

Recognizing this difference is critical for architects and engineering leaders, as it directly influences system design choices, governance models, and expectations regarding autonomy and responsibility within intelligent software systems.

Architectural Implications of Agency in AI Systems

Beyond terminology, the distinction between AI agents and agentic AI has profound architectural implications that directly affect system scalability, reliability, and governance.

Understanding how agency manifests at the system level helps engineering leaders anticipate design tradeoffs and avoid misalignment between technical capabilities and business expectations.

Control Flow vs. Decision Loops

Traditional AI agents are typically embedded within externally defined control flows. Their execution paths are orchestrated by deterministic pipelines, workflow engines, or application logic that specifies when an agent is invoked, what inputs it receives, and how outputs are handled. In this model, responsibility for sequencing, exception handling, and escalation remains outside the agent itself. As a result, system behavior is largely predictable and easier to audit, but flexibility is limited.

Agentic AI systems invert this pattern by internalizing control logic. Rather than being triggered by a predefined workflow, agentic systems operate through continuous decision loops that evaluate goals, context, and intermediate results. Planning, execution, monitoring, and re-planning occur within the system itself. This architectural shift enables responsiveness and adaptability, but it also introduces non-deterministic behaviors that must be explicitly managed through observability, constraints, and governance mechanisms.
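The internalized loop described above can be sketched in a few lines of Python. This is a deliberately toy example under stated assumptions: the `Environment` class, its dropped-increment behavior, and the `plan` and `run_decision_loop` functions are hypothetical names invented for illustration. The `max_steps` budget stands in for the kind of explicit constraint a real system would need.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """Toy environment: a counter the system tries to drive to a target.

    Every third increment is silently dropped to simulate an unexpected result.
    """
    value: int = 0
    calls: int = 0

    def increment(self) -> None:
        self.calls += 1
        if self.calls % 3 != 0:
            self.value += 1


def plan(current: int, target: int) -> list[str]:
    """Planning: turn the remaining gap into a list of steps."""
    return ["increment"] * max(target - current, 0)


def run_decision_loop(env: Environment, target: int, max_steps: int = 20) -> dict:
    """Plan, execute, monitor, and re-plan until the goal is met or the budget runs out."""
    steps_taken = 0
    replans = 0
    steps = plan(env.value, target)
    while env.value < target and steps_taken < max_steps:
        if not steps:
            # The plan is exhausted but the goal is not met: re-plan from actual state.
            steps = plan(env.value, target)
            replans += 1
        steps.pop(0)
        env.increment()
        steps_taken += 1
    return {"reached": env.value >= target, "steps": steps_taken, "replans": replans}
```

With a target of 4, the initial four-step plan falls one short because the third increment is dropped, so the loop re-plans once and finishes in five steps. Nothing outside the loop told it to retry. That is the inversion of control, and the step budget is the first of the guardrails it requires.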

Successfully deploying such systems requires careful architectural planning, tooling strategy, and governance, all of which are central to agentic AI implementation in enterprise environments.

State, Memory, and Temporal Reasoning

Another critical architectural distinction lies in how systems manage state and memory. Many AI agents are stateless or maintain only ephemeral context tied to a single task or interaction. Any long-term memory, if present, is typically externalized in databases or managed by the surrounding application.

Agentic AI systems, by contrast, rely on persistent memory as a first-class capability. This memory allows the system to reason across time, track progress toward goals, and incorporate historical context into decision-making.

Persistent memory enables behaviors such as learning from past outcomes, adjusting strategies, and maintaining continuity across interactions. However, it also raises important considerations around data lifecycle management, consistency, and compliance, particularly in regulated environments.
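As a rough illustration, the following sketch uses Python's standard `sqlite3` module to store outcomes per goal, recall the historically best strategy, and purge records past a retention window. The `GoalMemory` class, its table schema, and the one-day default retention are assumptions made for this example, not a recommended design.

```python
import sqlite3
import time
from typing import Optional


class GoalMemory:
    """Minimal persistent memory keyed by goal, with a retention window (hypothetical)."""

    def __init__(self, path: str = ":memory:", retention_seconds: float = 86_400.0):
        self.retention_seconds = retention_seconds
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS outcomes ("
            "goal TEXT, strategy TEXT, success INTEGER, created_at REAL)"
        )

    def record(self, goal: str, strategy: str, success: bool,
               now: Optional[float] = None) -> None:
        """Store the outcome of one attempt so later decisions can use it."""
        created_at = time.time() if now is None else now
        self.conn.execute(
            "INSERT INTO outcomes VALUES (?, ?, ?, ?)",
            (goal, strategy, int(success), created_at),
        )
        self.conn.commit()

    def purge_expired(self, now: Optional[float] = None) -> int:
        """Data lifecycle: delete records older than the retention window."""
        cutoff = (time.time() if now is None else now) - self.retention_seconds
        cursor = self.conn.execute(
            "DELETE FROM outcomes WHERE created_at < ?", (cutoff,)
        )
        self.conn.commit()
        return cursor.rowcount

    def best_strategy(self, goal: str) -> Optional[str]:
        """Return the strategy with the highest recorded success rate for a goal."""
        row = self.conn.execute(
            "SELECT strategy FROM outcomes WHERE goal = ? "
            "GROUP BY strategy ORDER BY AVG(success) DESC, COUNT(*) DESC LIMIT 1",
            (goal,),
        ).fetchone()
        return row[0] if row else None
```

Passing a file path instead of `":memory:"` makes the store survive restarts. The explicit `purge_expired` method is the interesting design point: once memory is a first-class capability, retention becomes part of the system's own interface rather than an afterthought.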

Tool Orchestration and System Composition

AI agents often interact with a narrow set of tools or APIs that are tightly coupled to their task definition. The orchestration of multiple agents or tools is handled externally through static integration logic.

Agentic AI introduces a more dynamic composition model. Systems can select, sequence, and invoke tools autonomously based on situational needs. This capability allows agentic systems to operate across domains and adapt workflows in real time, but it also increases the complexity of system testing, validation, and failure handling. Engineering teams must design guardrails to prevent unintended tool usage, cascading failures, or inefficient decision paths.
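One simple form such guardrails can take is an allowlist combined with a call budget. The sketch below assumes a hypothetical `GuardedToolbox` wrapper and two made-up exception types; it is not the API of any particular agent framework.

```python
from typing import Callable


class ToolNotAllowed(Exception):
    """Raised when the system requests a tool outside its allowlist."""


class BudgetExceeded(Exception):
    """Raised when the call budget for a run is used up."""


class GuardedToolbox:
    """Wraps a set of tools so every call passes an allowlist and a budget check."""

    def __init__(self, tools: dict[str, Callable[..., object]],
                 allowed: set[str], max_calls: int):
        self._tools = tools
        self._allowed = allowed
        self._remaining = max_calls

    def call(self, name: str, *args, **kwargs):
        # Guardrail 1: only explicitly permitted, registered tools may run.
        if name not in self._allowed or name not in self._tools:
            raise ToolNotAllowed(name)
        # Guardrail 2: a hard cap limits runaway or inefficient decision paths.
        if self._remaining <= 0:
            raise BudgetExceeded(name)
        self._remaining -= 1
        return self._tools[name](*args, **kwargs)
```

A system routed through this wrapper can still choose tools dynamically, but a registered `delete_all` tool that is absent from `allowed` would raise `ToolNotAllowed` instead of running. Denied calls do not consume budget here, which is a design choice rather than a requirement.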

Observability, Accountability, and Risk

As agency increases, so does the need for transparency. With AI agents, accountability is relatively straightforward: outcomes can usually be traced back to a specific input, rule, or model inference. Agentic AI systems, however, make decisions that unfold over time and may involve multiple interacting components. This makes post-hoc analysis more challenging.

Architectures supporting agentic AI must therefore incorporate enhanced observability, including decision tracing, intent logging, and outcome attribution. These capabilities are essential not only for debugging and optimization but also for meeting organizational requirements around accountability, compliance, and risk management.
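A decision trace does not have to be elaborate to be useful. The following sketch records intent, action, and outcome for each step and supports a basic form of outcome attribution. The `TraceEvent` and `DecisionTrace` names and their fields are assumptions chosen for illustration; real deployments would typically send these records to an existing logging or tracing backend.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TraceEvent:
    step: int
    intent: str   # why the system chose this action
    action: str   # what it did
    outcome: str  # what happened as a result


@dataclass
class DecisionTrace:
    """Append-only record of one goal-directed run (hypothetical structure)."""
    goal: str
    events: list[TraceEvent] = field(default_factory=list)

    def log(self, intent: str, action: str, outcome: str) -> TraceEvent:
        """Intent logging: capture the reasoning alongside the action taken."""
        event = TraceEvent(len(self.events) + 1, intent, action, outcome)
        self.events.append(event)
        return event

    def attribute(self, outcome: str) -> list[TraceEvent]:
        """Outcome attribution: find every step that produced a given outcome."""
        return [e for e in self.events if e.outcome == outcome]

    def to_json(self) -> str:
        """Serialize the trace for storage or audit review."""
        return json.dumps(
            {"goal": self.goal, "events": [asdict(e) for e in self.events]}
        )
```

Recording intent separately from action is the key choice. When a run goes wrong, reviewers can see not only which step failed but what the system believed it was trying to achieve at that point.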

As autonomy increases, organizations must address emerging risks related to decision transparency, tool misuse, and control boundaries, including the security threats specific to autonomous systems.

Organizational Impact of Architectural Choices

The choice to introduce agency into AI systems is not purely technical. It reshapes how organizations allocate responsibility between humans and machines. AI agents tend to support human-defined processes, reinforcing existing organizational structures. Agentic AI, by contrast, requires teams to delegate a degree of decision authority to software systems, accompanied by new governance models and escalation paths.

For this reason, many enterprises adopt a hybrid approach, combining task-focused AI agents with a limited agentic layer responsible for coordination and decision-making within carefully defined boundaries. This approach allows organizations to capture the benefits of adaptability while maintaining control over risk and accountability.
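The boundary in such a hybrid design can be expressed very directly in code. In the sketch below, the coordination layer is reduced to a single routing decision so that the escalation path stays visible. The `Request` type, the two task agents, and the `AUTHORITY_LIMIT` threshold are all hypothetical, and a real agentic layer would do considerably more than route.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    kind: str
    amount: float


def approve_refund(req: Request) -> str:
    """Task agent: performs one bounded action."""
    return f"refunded {req.amount:.2f}"


def send_reminder(req: Request) -> str:
    """Task agent: performs one bounded action."""
    return "reminder sent"


# Hypothetical registry of task-focused agents available to the coordinator.
TASK_AGENTS: dict[str, Callable[[Request], str]] = {
    "refund": approve_refund,
    "reminder": send_reminder,
}

# Hypothetical boundary: the largest amount the system may act on by itself.
AUTHORITY_LIMIT = 100.0


def coordinate(req: Request) -> str:
    """Coordination layer: delegate within bounds, escalate everything else."""
    agent = TASK_AGENTS.get(req.kind)
    if agent is None or req.amount > AUTHORITY_LIMIT:
        return "escalated to human"
    return agent(req)
```

Here `coordinate(Request("refund", 25.0))` is handled by the refund agent, while a 500.00 refund or an unrecognized request kind is escalated. The delegated authority is stated in one place, which makes it straightforward to review and adjust.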

When to Use AI Agents and When Agentic AI Is Required

The choice between deploying AI agents and adopting agentic AI systems should be guided by the nature of the problem domain, the required level of autonomy, and the organization’s tolerance for complexity and risk.

In many enterprise scenarios, AI agents provide sufficient value by automating well-defined tasks within stable and predictable workflows. When objectives are narrowly scoped, decision paths are largely deterministic, and strong requirements for auditability or human oversight exist, task-oriented AI agents represent a pragmatic and cost-effective solution.

Agentic AI, however, becomes necessary when software systems are expected to operate in dynamic environments where goals evolve over time or cannot be fully specified in advance. Examples include complex operational platforms and healthcare applications, where systems must continuously adapt to patient data, workflows, and regulatory constraints.

In such contexts, the coordination of multiple tasks, tools, and data sources through static orchestration mechanisms often proves inadequate. Agentic AI systems address this limitation by introducing internal decision-making loops that enable continuous planning, prioritization, and adaptation based on changing conditions.

| Decision Dimension | AI Agents | Agentic AI |
|---|---|---|
| Primary role | Execute tasks | Pursue outcomes |
| Autonomy level | Limited | High (with constraints) |
| Who sets priorities | Humans only | The system can adjust priorities |
| Ability to plan | None or minimal | Built-in planning and re-planning |
| Response to change | Fails or escalates | Adapts strategy |
| Predictability | High | Medium |
| Governance effort | Low | High |
| Risk exposure | Well-understood | Requires active risk management |
| Time to value | Short | Medium to long |
| Operational complexity | Low | High |
| Best use cases | Stable, repeatable processes | Dynamic, evolving environments |
| Executive mindset required | Automation | Delegation with controls |

From a business perspective, the adoption of agentic AI is justified when the cost of manual orchestration, rule-based automation, or human intervention exceeds the operational and governance overhead introduced by autonomous decision-making.

Examples include complex operational platforms, adaptive customer engagement systems, or multi-domain digital workflows where responsiveness and scalability are critical. At the same time, organizations must account for the increased demands agentic AI places on system observability, risk management, and organizational accountability.

Consequently, selecting between AI agents and agentic AI is not a binary decision but a strategic architectural choice. Technology leaders must balance immediate efficiency gains against long-term system adaptability, ensuring that the chosen approach aligns with both technical constraints and business objectives.

The Strategic View

AI agents primarily deliver efficiency by accelerating execution within clearly defined boundaries. Agentic AI, by contrast, introduces adaptability by enabling systems to reassess goals and adjust behavior over time.

These approaches are not mutually exclusive and are likely to coexist across enterprise systems for the foreseeable future. In practice, the most effective architectures combine multiple specialized agents with a limited agentic layer responsible for coordination and decision-making.

In our work with enterprise clients at Svitla Systems, we increasingly observe a shift in architectural discussions, from whether AI agents should be adopted to where and to what extent agency should be introduced.

This shift reflects a broader trend in agentic AI, with enterprises increasingly focused on balancing autonomy, governance, and long-term system resilience. It also highlights a strategic challenge: deliberately allocating autonomy within software systems. The organizations that navigate this balance effectively will shape the next generation of intelligent, resilient software platforms.

Conclusion

The growing interest in intelligent software systems has brought renewed attention to the concepts of AI agents and agentic AI, often without sufficient distinction between them.

As this article has demonstrated, these approaches represent fundamentally different design philosophies rather than incremental variations of the same idea. AI agents excel at executing well-defined tasks within controlled boundaries, making them highly effective for efficiency-driven automation in enterprise environments. Agentic AI, in contrast, introduces a system-level capability for goal-driven behavior, adaptive planning, and sustained autonomy over time.

Understanding this distinction is critical for technology leaders, architects, and product teams tasked with designing systems that must balance efficiency, adaptability, and governance.

The decision to introduce agency into software systems carries architectural, organizational, and risk-related implications that extend far beyond model selection or tooling choices. Rather than treating agentic AI as a universal upgrade, organizations should approach it as a strategic capability to be applied selectively and deliberately.

Ultimately, the future of intelligent software will not be defined by whether AI is used, but by how autonomy is allocated within systems. Enterprises that develop a clear mental model of agency (and apply it with discipline) will be better positioned to build resilient, scalable, and trustworthy AI-enabled platforms.