Apache Spark is an open-source framework for implementing distributed processing of unstructured and semi-structured data, part of the Hadoop ecosystem of projects. Spark works in the in-memory computing paradigm: it processes data in RAM, which makes it possible to obtain significant performance gains for some types of tasks. In particular, the ability to repeatedly access user data loaded into memory makes the library attractive for machine learning algorithms.

Spark performs in-memory computations to analyze data in real-time. Previously, Apache Hadoop MapReduce only performed batch processing and did not have real-time processing functionality. As a result, the Apache Spark project was introduced because it can do real-time streaming and can also do batch processing. The Spark project provides programming interfaces for the languages Java, Scala, Python, and R. Originally written in Scala, it later added a significant part of the Java code to provide the ability to write programs directly in Java.

It consists of a core and several extensions such as:

- Spark SQL (allows you to execute SQL queries on data)

- Spark Streaming (streaming data add-on)

- Spark MLlib (set of machine learning libraries)

- GraphX (designed for distributed graph processing).

Spark It can work both in a Hadoop cluster environment running YARN, and without Hadoop core components, supports several distributed storage systems: HDFS, OpenStack Swift, NoSQL-DBMS Cassandra, Amazon S3.

PySpark library

The PySpark library was created with the goal of providing easy access to all the capabilities of the main Spark system and quickly creating the necessary functionality in Python. This eliminates the need to compile Java code and the speed of the main functions remains the same. PySpark, as well as Spark, includes core modules: SQL, Streaming, ML, MLlib and GraphX.

PySpark Resilient Distributed Dataset.

A Resilient Distributed Dataset (RDD) is the basic abstraction in Spark. It represents an immutable, partitioned collection of elements that can be operated in parallel. These are the elements that run and operate on multiple nodes to do parallel processing on a cluster. To perform computation on a Resilient Distributed Dataset, you can use the Transformation or Action approach:

- Transformation. These are the operations, which are applied on RDD to create a new RDD. Filter, groupBy, and map are examples of transformations.

- Action. These are the operations that are applied on RDD, which instructs Spark to perform computation and send the results back to the driver.

PySpark SQL

PySparkSQL is used to create a DataFrame and includes classes such as:

- SparkSession is the entry point for creating a DataFrame and using SQL functions.

- DataFrame is a distributed set of data grouped into named columns.

- Column expression in the DataFrame.

- Row of data in a DataFrame.

- GroupedData are the aggregation methods returned by DataFrame.groupBy ().

- DataFrameNaFunctions - methods for handling missing data (Nan values).

- DataFrameStatFunctions - methods for statistical data processing.

- functions - a list of built-in functions available for the DataFrame.

- types - a list of available data types.

- Window for working with window functions.

PySpark STREAMING

The Streaming module provides access to streaming functionality and is an extension of the core Spark API that allows Data Scientists to process data in real time from a variety of sources, including but not limited to Kafka, Flume, and Amazon Kinesis. This processed data can be sent to file systems, databases or dashboards. Streaming is based on DStream (Discretized Stream), which represents a stream of data divided into small RDD packets. These packages can be integrated with any other Spark component like MLlib.

PySpark ML and MLlib

PySpark has two similar modules for Machine Learning - ML and MLlib. They differ only in the type of data construction: ML uses DataFrame, and MLlib uses RDD. Since the DataFrame is more convenient to use, Spark developers recommend using the ML module. Machine learning modules are rich in different tools, and the interface is similar to another popular Python library for Machine Learning - Scikit-learn. Here are the main tools:

- Pipeline, which creates the stages of modeling;

- Data extraction using Binarizer, MinMaxScaler, CoutVectorizer, Word2Vec and more then 50 other classes;

- Classification, including logistic regression, decision trees, random forests, etc. There are about 20 in total;

- Clustering of dozen algorithms such as k-means, Latent Dirichlet Allocation (LDA);

- Regression - linear (linear regression), decision trees and about 20 more regression algorithms.

GraphX

In modern information systems and modeling systems, graphs are used more and more to establish relationships between entities and objects. The size of such graphs can reach enormous sizes, for example, hundreds of millions of nodes with complex relationships between them. This is why PySpark created a very fast in-memory library for graph computation named GraphX.

As mentioned in PySpark documentation: “GraphX is a new component in Spark for graphs and graph-parallel computation. At a high level, GraphX extends the Spark RDD by introducing a new Graph abstraction: a directed multigraph with properties attached to each vertex and edge. To support graph computation, GraphX exposes a set of fundamental operators (e.g., subgraph, joinVertices, and aggregateMessages) as well as an optimized variant of the Pregel API. In addition, GraphX includes a growing collection of graph algorithms and builders to simplify graph analytics tasks.”

Installation

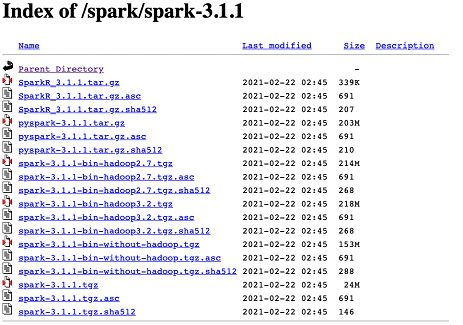

To install PySpark on your computer, you need to download the Spark version from the Apache official website. You can check the availability of the new version of the installer in the directory of the Apache download server:

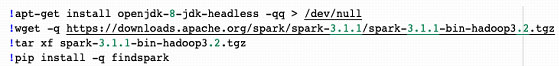

Next, use apt-get install to install OpenJDK. You will also need the findspark Python library to install PySpark. Try the installation in the Google Colab environment. This cloud Jupyter notebook is perfectly suitable to work with PySpark.

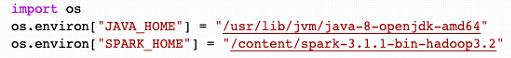

Next, set two environment variables so that PySpark can start successfully.

Testing PySpark on Google Colab

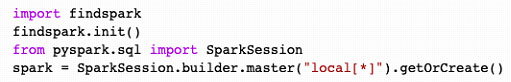

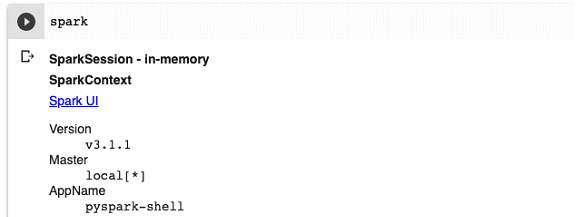

To verify the PySpark installation on Google Colab, import the findspark library and create a spark session.

Then, to simply print the spark variable, you will have the following output:

I.e. PySpark is installed and configured correctly.

Simple PySpark example on Google Colab

Let’s take the simplest computational example in standard Python without PySpark. This code finds the maximum in the data for the second column.

data = [(1., 8.), (2., 21.), (3., 7.), (4., 7.), (5., 11.)]

max = data[0][1] # initiate search with first element

for i in data:

if max < i[1]:

max = i[1]

print(max)

Code language: PHP (php)You will get the following output from this code

21.0Code language: CSS (css)Now, let's see how this example can work in PySpark. Note that to get a real speedup, you will need to run a really large array of input data.

from pyspark.sql.functions import max

from pyspark.sql import SQLContext

from pyspark import SparkContext

sc = SparkContext.getOrCreate()

sqlContext = SQLContext(sc)

df = sqlContext.createDataFrame([(1., 8.), (2., 21.), (3., 7.), (4., 7.), (5., 11.)], ["First", "Second"])

df.show()

result = df.select([max("Second")])

result.show()

print (result.collect()[0]['max(Second)'])

Code language: JavaScript (javascript)This will generate the following output.

+-----+------+

|First|Second|

+-----+------+

| 1.0| 8.0|

| 2.0| 21.0|

| 3.0| 7.0|

| 4.0| 7.0|

+-----+------+

+-----------+

|max(Second)|

+-----------+

| 21.0|

+-----------+

21.0

Here, you will be able to see that the result is the same as in the previous code sample. Of course, to achieve faster computation time compared to the usual approach, PySpark needs a lot of data like millions of elements in an array. In this large case, it will be really fast compared to standard methods. To run PySpark on the cluster of computers, please refer to the “Cluster Mode Overview” documentation.

Conclusion

This article is a robust introduction to the PySpark area, and of course, you can search for more information as well as detailed examples to explore in this resource. From the simplest example, you can draw these conclusions:

- Spark is an excellent system for in-memory computing

- PySpark is easy enough to install on your system

- The threshold for entering the system is not very high. You can figure it out quickly as the system is well documented with sufficient documentation and tutorials, and there is a large community.

Our Svitla Systems specialists have extensive experience using Spark, PySpark, and other high-performance systems. To increase the speed of your project, especially when it comes to processing large amounts of data, contact us, so we can start outlining the best solution for you. We also perform other IT solutions such as web development, mobile development, machine learning systems, artificial intelligence, software testing, and so on. With Svitla Systems, rest assured that your projects are in expert hands and with prompt execution, all wrapped up in a long-term, nurturing cooperation.