Building upon the foundational concepts of IAM, this post delves into advanced strategies, additional AWS security tools, and real-world scenarios to further enhance your AWS database security posture.

Real-World Examples: IAM in Action

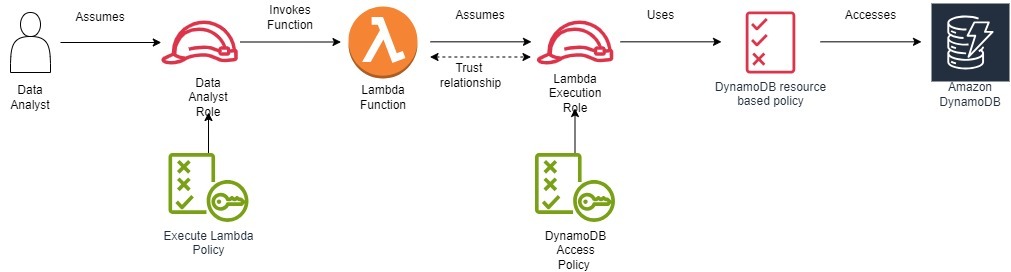

Scenario 1. Secure Data Analytics Pipeline

A data analyst needs to query data from a DynamoDB table and process it using a Lambda function.

Solution:

1. Create Lambda Execution Role: Create an IAM role dedicated to the Lambda function. Only the Lambda function should be able to assume this role, and it should be the only identity in this workflow with access to DynamoDB.

2. Attach Policy to Lambda Execution Role: Attach a policy to the Lambda execution role, granting it the necessary permissions to access the DynamoDB table. For example:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "dynamodb:GetItem",
            "dynamodb:Scan"
          ],
          "Resource": "arn:aws:dynamodb:<region>:<account-id>:table/<DynamoDB-table-name>"
        }
      ]
    }

3. Create Data Analyst Role: Create an IAM role for the data analyst with restricted permissions. This role should not have direct access to DynamoDB.

4. Attach Policy to Data Analyst Role: Attach a policy to the data analyst's role that allows it to invoke the Lambda function:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "lambda:InvokeFunction",
          "Resource": "arn:aws:lambda:<region>:<account-id>:function:<Lambda-function-name>"
        }
      ]
    }

5. Lambda Configuration: Configure the Lambda function to use the Lambda execution role.

6. Trust Relationships:

a. Lambda Execution Role Trust Relationship: The Lambda execution role's trust policy should explicitly allow the Lambda service (lambda.amazonaws.com) to assume it on the function's behalf.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": { "Service": "lambda.amazonaws.com" },
          "Action": "sts:AssumeRole"
        }
      ]
    }

b. Data Analyst Role Trust Relationship: There is no trust relationship between the data analyst's role and the Lambda execution role. The data analyst should only be able to invoke the Lambda function.

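Restrict Console Access: The aws:ViaAWSService condition key is sometimes suggested for keeping the data analyst out of the AWS Management Console, but it only indicates whether an AWS service made the request on the principal's behalf. It does not distinguish console requests from API/CLI requests. A more dependable approach is to grant the analyst role only lambda:InvokeFunction and none of the read or list permissions (such as lambda:GetFunction or lambda:ListFunctions) that the console needs to display the function. The analyst can then invoke the function via API/CLI but cannot browse to it in the console.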

Key Points:

- Separation of Roles: The data analyst has a separate role with limited permissions, while the Lambda function has a dedicated role for accessing DynamoDB. This separation of duties is crucial for security.

- Trust Relationships: The Lambda execution role has a trust relationship with Lambda, allowing Lambda to assume the execution role.
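
The three policy documents from this scenario can also be generated in code rather than pasted by hand. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured for the `create_execution_role` call. The function names and the inline policy name `dynamodb-read-only` are illustrative choices, not part of the original setup.

```python
import json


def execution_role_policy(table_arn):
    """Permissions for the Lambda execution role: read-only on one table."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:Scan"],
            "Resource": table_arn,
        }],
    }


def lambda_trust_policy():
    """Trust policy that lets the Lambda service assume the execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def analyst_policy(function_arn):
    """Permissions for the analyst role: invoke one function, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "lambda:InvokeFunction",
            "Resource": function_arn,
        }],
    }


def create_execution_role(role_name, table_arn):
    """Create the execution role and attach its inline policy (needs AWS credentials)."""
    import boto3  # imported here so the builders above work without boto3

    iam = boto3.client("iam")
    iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(lambda_trust_policy()),
    )
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName="dynamodb-read-only",  # hypothetical policy name
        PolicyDocument=json.dumps(execution_role_policy(table_arn)),
    )
```

Keeping the builders separate from the boto3 call makes it easy to review or unit-test the exact JSON before anything is created in the account.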

IAM policies can incorporate condition keys, enabling you to define dynamic access controls based on factors like time of day, IP address, or even the user's department. This allows for highly customized and context-aware access management. Example condition keys include aws:SourceIp, aws:CurrentTime, and aws:PrincipalOrgID.
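
To make the idea of condition keys concrete, here is a toy model of how a `Condition` block is matched against the context of a request. It is a simplified sketch for illustration only, not IAM's actual evaluation engine: it supports just four operators with single values, and the function name `condition_matches` is hypothetical.

```python
from datetime import datetime, timezone
from ipaddress import ip_address, ip_network


def _parse(timestamp):
    """Parse an ISO 8601 UTC timestamp such as 2023-10-01T08:00:00Z."""
    return datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def condition_matches(condition, context):
    """Return True only if every operator/key pair in `condition` matches `context`."""
    for operator, pairs in condition.items():
        for key, expected in pairs.items():
            actual = context.get(key)
            if actual is None:
                return False  # a missing context key never matches in this toy model
            if operator == "IpAddress":
                ok = ip_address(actual) in ip_network(expected)
            elif operator == "StringEquals":
                ok = actual == expected
            elif operator == "DateGreaterThan":
                ok = _parse(actual) > _parse(expected)
            elif operator == "DateLessThan":
                ok = _parse(actual) < _parse(expected)
            else:
                raise ValueError(f"unsupported operator: {operator}")
            if not ok:
                return False
    return True


condition = {
    "IpAddress": {"aws:SourceIp": "203.0.113.0/24"},
    "StringEquals": {"aws:PrincipalOrgID": "o-example"},
}
request = {"aws:SourceIp": "203.0.113.7", "aws:PrincipalOrgID": "o-example"}
print(condition_matches(condition, request))
```

All operators in a block must match for the statement to apply, which is why adding more conditions narrows access rather than widening it.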

To enhance security and ensure that the Lambda execution role accesses only the intended DynamoDB table, we can use IAM condition keys based on resource tags.

Steps:

- Tag DynamoDB Tables:
  - customer_data table: tag with AccessLevel=Public.
  - sensitive_customer_data table: tag with AccessLevel=Sensitive.

- Update Lambda Execution Role Policy:

Allow Access to Public Data:

    {
      "Effect": "Allow",
      "Action": [
        "dynamodb:GetItem",
        "dynamodb:Query",
        "dynamodb:Scan",
        "dynamodb:PutItem",
        "dynamodb:UpdateItem",
        "dynamodb:DeleteItem"
      ],
      "Resource": "arn:aws:dynamodb:<region>:<account-id>:table/*",
      "Condition": {
        "StringEquals": { "aws:ResourceTag/AccessLevel": "Public" }
      }
    }

Deny Access to Sensitive Data:

    {
      "Effect": "Deny",
      "Action": "dynamodb:*",
      "Resource": "arn:aws:dynamodb:<region>:<account-id>:table/*",
      "Condition": {
        "StringEquals": { "aws:ResourceTag/AccessLevel": "Sensitive" }
      }
    }

By using resource tags and condition keys, we ensure that the Lambda function can access only the tables intended for it and is explicitly denied access to sensitive tables. DynamoDB evaluates tag conditions through the global aws:ResourceTag key, and only when attribute-based access control (ABAC) is active for DynamoDB in the account.
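
The interaction between the two statements above follows a general IAM rule: an explicit Deny always overrides an Allow, and anything not allowed is implicitly denied. The sketch below is a toy model of that rule for tag-based statements, not the real policy engine. The `decide` function is hypothetical, and the condition key name used in the sample statements is an assumption.

```python
STATEMENTS = [
    {
        "Effect": "Allow",
        "Condition": {"StringEquals": {"aws:ResourceTag/AccessLevel": "Public"}},
    },
    {
        "Effect": "Deny",
        "Condition": {"StringEquals": {"aws:ResourceTag/AccessLevel": "Sensitive"}},
    },
]


def decide(statements, table_tags):
    """Return "Allow", "Deny", or "ImplicitDeny" for a table with the given tags."""
    allowed = False
    for statement in statements:
        wanted = statement["Condition"]["StringEquals"]
        # Keys look like "<prefix>:ResourceTag/<TagName>"; compare on the tag name.
        matches = all(
            table_tags.get(key.split("/", 1)[1]) == value
            for key, value in wanted.items()
        )
        if not matches:
            continue
        if statement["Effect"] == "Deny":
            return "Deny"  # an explicit deny wins immediately
        allowed = True
    return "Allow" if allowed else "ImplicitDeny"


print(decide(STATEMENTS, {"AccessLevel": "Public"}))
print(decide(STATEMENTS, {"AccessLevel": "Sensitive"}))
print(decide(STATEMENTS, {}))
```

Note the third case: a table with no AccessLevel tag matches neither statement, so access falls through to the implicit deny. Untagged tables are therefore inaccessible by default under this design.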

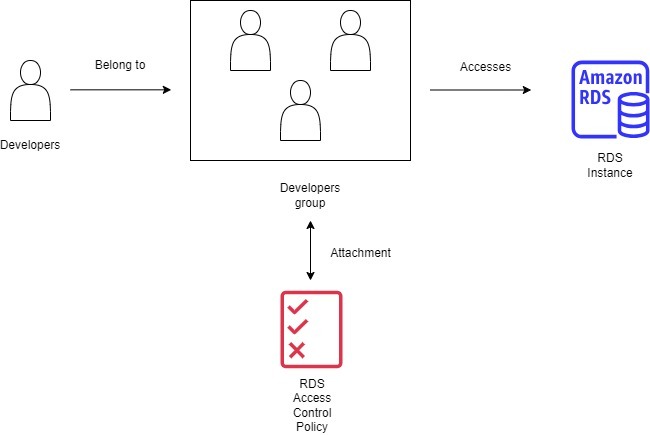

Scenario 2. Secure Database Access for Application Developers

A team of developers needs read-only access to a specific Amazon RDS (Relational Database Service) instance for testing purposes. The goal is to provide them with the necessary access while maintaining the database's security and integrity.

Solution:

1. Create IAM Role for Developers:
   - Role Name: RDSReadOnlyRole
   - Purpose: Grants developers read-only access to the specific RDS instance.

2. Define the IAM Policy: Create a custom IAM policy, RDSReadOnlyPolicy, that allows read-only access to the RDS instance.

Policy Document example:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "DescribeDBInstances",
          "Effect": "Allow",
          "Action": [
            "rds:DescribeDBInstances",
            "rds:ListTagsForResource"
          ],
          "Resource": "*"
        },
        {
          "Sid": "ConnectToRDSInstance",
          "Effect": "Allow",
          "Action": "rds-db:connect",
          "Resource": "arn:aws:rds-db:<region>:<account-id>:dbuser:<dbi-resource-id>/*"
        }
      ]
    }

The trailing /* in the rds-db:connect resource lets the holder connect as any IAM-enabled database user on the instance. Replace it with a specific database user name, such as dev_readonly_user, to narrow the grant.

3. Create an IAM Group for Developers:
   - Group Name: DevelopersGroup
   - Purpose: Organizes developer IAM users for easier management.

4. Attach the Policy to the IAM Group:
   - Attach RDSReadOnlyPolicy to DevelopersGroup.
   - This grants all users in the group the permissions defined in the policy.

5. Add Developers to the IAM Group:
   - Add each developer's IAM user to DevelopersGroup. IAM groups can contain only users, so attach RDSReadOnlyPolicy directly to any role (such as RDSReadOnlyRole) that needs the same access.

6. Enable IAM Database Authentication on RDS:
   - Modify the RDS Instance:
     - Go to the RDS console.
     - Select the specific RDS instance.
     - Choose Modify.
     - Under Database Authentication, select "Password and IAM database authentication".
     - Apply changes (may require a database reboot).

7. Create a Database User for IAM Authentication:
   - Connect to the RDS database as an admin.
   - Create a read-only database user:

For MySQL:

    CREATE USER 'dev_readonly_user'@'%' IDENTIFIED WITH AWSAuthenticationPlugin AS 'RDS';
    GRANT SELECT ON *.* TO 'dev_readonly_user'@'%';

For PostgreSQL:

    CREATE ROLE dev_readonly_user WITH LOGIN;
    -- Required so the role can authenticate with an IAM token
    GRANT rds_iam TO dev_readonly_user;
    GRANT CONNECT ON DATABASE <database-name> TO dev_readonly_user;
    GRANT USAGE ON SCHEMA public TO dev_readonly_user;
    GRANT SELECT ON ALL TABLES IN SCHEMA public TO dev_readonly_user;
    -- Also cover tables created in this schema later
    ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT SELECT ON TABLES TO dev_readonly_user;

8. Developers Generate an Authentication Token

- Developers use the AWS CLI or AWS SDK to generate a temporary authentication token.

- AWS CLI Command:

    aws rds generate-db-auth-token \
      --hostname <db-endpoint> \
      --port <db-port> \
      --region <region> \
      --username dev_readonly_user

9. Developers Connect to the RDS Instance

- PostgreSQL Connection Example:

    psql "host=<db-endpoint> port=5432 dbname=<database-name> sslmode=verify-full
    sslrootcert=/path/to/rds-combined-ca-bundle.pem user=dev_readonly_user
    password=<authentication-token>"

10. Restrict Access with Security Groups

Modify the RDS Security Group:

- Allow inbound traffic on the database port from the developers’ IP addresses or VPC subnets.

- Example Inbound Rule:

- Type: MySQL/Aurora or PostgreSQL

- Protocol: TCP

- Port Range: 3306 or 5432

- Source: Developers’ IP range (e.g., 203.0.113.0/24)

11. Implement Conditional Access with IAM Condition Keys

Enhance Security by Adding Conditions to the Policy:

    {
      "Effect": "Allow",
      "Action": "rds-db:connect",
      "Resource": "arn:aws:rds-db:<region>:<account-id>:dbuser:<dbi-resource-id>/*",
      "Condition": {
        "DateGreaterThan": {
          "aws:CurrentTime": "2023-10-01T08:00:00Z"
        },
        "DateLessThan": {
          "aws:CurrentTime": "2023-10-01T18:00:00Z"
        },
        "IpAddress": {
          "aws:SourceIp": "203.0.113.0/24"
        }
      }
    }

This statement allows connections only within the specified window (8AM to 6PM UTC on October 1, 2023) and only from the given IP range. The aws:CurrentTime key compares against absolute timestamps, so a recurring business-hours restriction means refreshing the dates on a schedule.
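
Since the time window in the statement above is tied to fixed timestamps, one way to keep it current is to regenerate the statement for each working day. The following is a minimal sketch of such a generator. The function name and its default hours are assumptions, and the scheduling and policy update are left out.

```python
from datetime import date, datetime, time, timezone

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def business_hours_statement(day, resource_arn, cidr, start_hour=8, end_hour=18):
    """Build an rds-db:connect statement valid only on `day` between the given UTC hours."""
    start = datetime.combine(day, time(start_hour), tzinfo=timezone.utc)
    end = datetime.combine(day, time(end_hour), tzinfo=timezone.utc)
    return {
        "Effect": "Allow",
        "Action": "rds-db:connect",
        "Resource": resource_arn,
        "Condition": {
            "DateGreaterThan": {"aws:CurrentTime": start.strftime(TIMESTAMP_FORMAT)},
            "DateLessThan": {"aws:CurrentTime": end.strftime(TIMESTAMP_FORMAT)},
            "IpAddress": {"aws:SourceIp": cidr},
        },
    }


statement = business_hours_statement(
    date(2023, 10, 1),
    "arn:aws:rds-db:us-east-1:123456789012:dbuser:db-EXAMPLE/dev_readonly_user",
    "203.0.113.0/24",
)
print(statement["Condition"]["DateGreaterThan"]["aws:CurrentTime"])
```

A scheduled job could call this once a day and write the result into the policy, which also gives a natural place to skip weekends or holidays.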

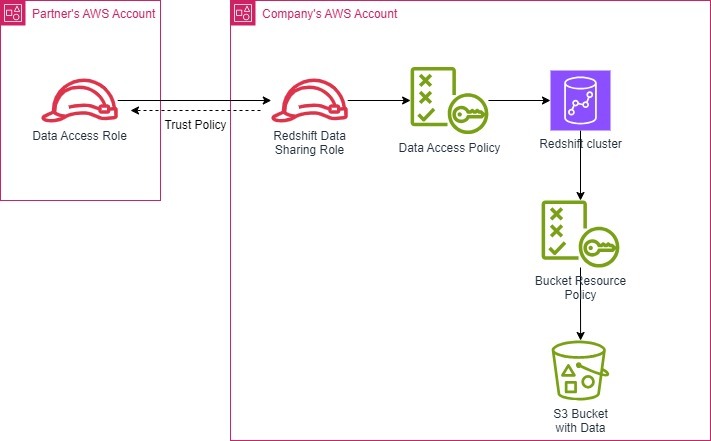

Scenario 3. Secure Data Sharing with External Partners

A company needs to share data from a Redshift cluster with an external partner.

Solution:

1. Redshift Data Sharing: Use Redshift data sharing to grant access to the partner's AWS account. This involves setting up a data sharing arrangement between the company's account (the "data provider") and the partner's account (the "data consumer"), established here through a trust relationship between the two accounts. The arrangement gives the partner a secure channel to the shared data without requiring direct access to the company's account.

Implementation Steps:

IAM Role Creation: The company creates a dedicated IAM role in its account specifically for data sharing with the partner, named for example redshift-data-sharing-role or redshift_data_sharing_role (IAM role names cannot contain spaces).

Trust Policy: The company then attaches a trust policy to this role. The trust policy defines which entities (IAM users, roles, or AWS services) can assume the role. For data sharing, it names either the partner account as a whole (the :root principal, which lets the partner's administrators delegate access to their own users and roles) or a designated IAM role within the partner account.

Example Trust Policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": { "AWS": "arn:aws:iam::<partner-account-id>:root" },
          "Action": "sts:AssumeRole",
          "Condition": {
            "StringEquals": { "sts:ExternalId": "my-data-sharing-id" }
          }
        }
      ]
    }

Data Sharing Configuration: The company configures the Redshift Data Sharing settings to grant access to the partner’s account. This involves associating the Redshift Data Sharing Role with the shared data.

Partner Access: The partner can then assume the Redshift Data Sharing Role using the AWS Security Token Service (STS). This grants them temporary access to the shared data in the Redshift cluster.
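
On the partner side, assuming the role could look like the sketch below. It assumes boto3 is installed and that the caller has credentials in the partner account. The role name, session name, and one-hour duration are illustrative values, and the external ID must match the one in the trust policy.

```python
def assume_role_request(provider_account_id, external_id,
                        role_name="redshift-data-sharing-role",
                        session_name="partner-data-access"):
    """Build the parameters for sts:AssumeRole against the provider's sharing role."""
    return {
        "RoleArn": f"arn:aws:iam::{provider_account_id}:role/{role_name}",
        "RoleSessionName": session_name,  # hypothetical session name
        "ExternalId": external_id,        # must equal sts:ExternalId in the trust policy
        "DurationSeconds": 3600,          # temporary credentials valid for one hour
    }


def assume_sharing_role(provider_account_id, external_id):
    """Call STS and return the temporary credentials (needs AWS credentials)."""
    import boto3  # imported here so the request builder works without boto3

    sts = boto3.client("sts")
    response = sts.assume_role(**assume_role_request(provider_account_id, external_id))
    # Contains AccessKeyId, SecretAccessKey, SessionToken, and Expiration.
    return response["Credentials"]
```

The returned credentials expire on their own, so the partner never holds a long-lived key for the company's account.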

2. Attach Policy: Implement IAM policies to control which tables the partner can access and what actions they can perform. The IAM policy attached to the Redshift Data Sharing Role defines these permissions, ensuring that the partner can access only the shared data and perform only the limited actions (e.g., read-only) they need.

3. Secure S3 Buckets: If you use Redshift Spectrum, secure the S3 buckets it reads with IAM and bucket policies. Redshift Spectrum queries data stored in S3, so the bucket policy should grant access only to the principals that need it, such as the IAM role attached to the Redshift cluster. Example bucket policy (the role and bucket names are placeholders):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": { "AWS": "arn:aws:iam::<account-id>:role/<redshift-spectrum-role>" },
          "Action": [
            "s3:GetObject",
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::<bucket-name>",
            "arn:aws:s3:::<bucket-name>/*"
          ]
        }
      ]
    }

Conclusion: Elevating Your Database Security Posture

Mastering IAM is essential for securing your AWS databases and protecting your valuable data. By understanding IAM's advanced features, implementing best practices, and leveraging real-world examples, you can build a robust security framework that safeguards your data from unauthorized access and ensures compliance with industry standards. Remember, security is an ongoing process, requiring continuous monitoring, auditing, and adaptation to evolving threats.

Additional Tools and Services for Enhanced Security

Beyond the foundational elements of IAM, AWS offers a robust suite of tools and services designed to fortify your database security posture. Let's delve into the implementation steps, potential use cases, and associated pricing for each of these powerful tools, with a focus on technical considerations and practical insights:

1. AWS Security Hub

Purpose: Security Hub is your centralized security operations center, aggregating findings from various AWS services (including IAM) and third-party integrations. This consolidated view streamlines your security management and allows for efficient remediation of vulnerabilities.

Implementation Steps:

- Enable Security Hub: Activate Security Hub within your AWS account.

- Select Standards: Choose from AWS-managed security standards (e.g., CIS AWS Foundations Benchmark) or create custom standards based on your organization's specific compliance requirements and risk tolerance.

- Configure Automated Actions: Utilize Amazon EventBridge to orchestrate automated responses to Security Hub findings. For instance, automatically isolate a non-compliant EC2 instance or trigger an AWS Lambda function to remediate security issues dynamically.
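
The automated-actions step can be sketched as an EventBridge rule that matches imported Security Hub findings and forwards them to a remediation Lambda function. This is a minimal sketch, assuming boto3 and AWS credentials for the `create_rule` call. The rule name, target ID, and severity filter are illustrative.

```python
import json


def securityhub_event_pattern(severities=("HIGH", "CRITICAL")):
    """Event pattern matching Security Hub findings with the given severity labels."""
    return {
        "source": ["aws.securityhub"],
        "detail-type": ["Security Hub Findings - Imported"],
        "detail": {"findings": {"Severity": {"Label": list(severities)}}},
    }


def create_rule(rule_name, lambda_arn):
    """Create the rule and point it at a remediation function (needs AWS credentials)."""
    import boto3  # imported here so the pattern builder works without boto3

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(securityhub_event_pattern()),
        State="ENABLED",
    )
    # The function also needs a resource-based permission that lets
    # events.amazonaws.com invoke it; that step is not shown here.
    events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "remediation-lambda", "Arn": lambda_arn}],
    )
```

Filtering on severity in the pattern keeps low-priority findings from triggering the function at all, which is cheaper than filtering inside the handler.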

Pricing: Security Hub offers a 30-day free trial. After that, charges are usage-based and driven mainly by the number of security checks run and the number of finding ingestion events, so cost scales with the size of your environment. Check the current AWS pricing page for exact rates.

Engineering Use Cases:

- Compliance Automation: Implement continuous monitoring and automated remediation to ensure adherence to security standards such as PCI DSS, HIPAA, or SOC 2.

- Vulnerability Management: Prioritize remediation based on severity and risk, allowing your team to focus on the most critical vulnerabilities first.

- Incident Response Orchestration: Streamline incident response workflows by integrating SIEM tools and automated remediation playbooks.

2. Amazon GuardDuty

Purpose: GuardDuty is an intelligent threat detection service that continuously monitors your AWS environment for abnormal activity. It detects potential security threats by leveraging machine learning algorithms and threat intelligence feeds, enabling proactive security incident response.

Implementation Steps:

- Enable GuardDuty: Activate GuardDuty within your AWS account.

- Fine-Tune Findings: Regularly review GuardDuty's findings, which are classified by severity (low, medium, high). Tune the output for your environment and risk profile with suppression rules, trusted IP lists, and custom threat lists.

- Automate Incident Response: Implement AWS Lambda functions or AWS Step Functions state machines to trigger automated responses to GuardDuty findings, such as isolating compromised resources or disabling compromised IAM users.
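
An automated response usually starts with a Lambda function that receives the finding from EventBridge and decides what to do. The handler below is a minimal sketch: it reads the numeric severity from the event, maps it to the low/medium/high bands mentioned above, and returns a decision. The `isolate` and `notify` action names are hypothetical placeholders for real remediation code.

```python
def severity_label(score):
    """Map GuardDuty's numeric severity to a label (7.0 and above treated as high)."""
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    return "low"


def lambda_handler(event, context):
    """Entry point for an EventBridge rule that matches GuardDuty findings."""
    detail = event["detail"]
    label = severity_label(float(detail["severity"]))
    # Placeholder decision: real code would quarantine the resource or
    # disable credentials for high-severity findings.
    action = "isolate" if label == "high" else "notify"
    return {
        "finding_id": detail["id"],
        "finding_type": detail["type"],
        "severity": label,
        "action": action,
    }
```

Returning the decision as data keeps the handler easy to test locally with sample events before wiring in any destructive action.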

Pricing: GuardDuty offers a 30-day free trial. After the trial, costs are based on the volume of data analyzed (e.g., CloudTrail events, VPC Flow Logs, DNS logs), not on the number of findings generated. Consider your organization's data volume when estimating costs.

Engineering Use Cases:

- Anomaly Detection: Identify unusual API call patterns, unauthorized data access attempts, and potential malware infections within your AWS environment.

- Threat Hunting: Proactively search for Indicators of Compromise (IoCs) and investigate suspicious activities.

- Compromise Assessment: Rapidly assess the scope of a security incident and contain potential breaches.

3. AWS Key Management Service (KMS)

Purpose: KMS provides a secure and scalable platform for managing encryption keys. Integrating with AWS Secrets Manager, KMS enables you to encrypt and decrypt database credentials and other sensitive data with fine-grained access controls.

Implementation Steps:

- Create KMS Key: Generate a KMS customer-managed key (CMK) specifically for encrypting and decrypting database credentials.

- Define Key Policies: Write a key policy, supplemented by IAM policies, that enforces least-privilege access to the KMS key so that only authorized users and services can use it.

- Integrate with Secrets Manager: Configure Secrets Manager to utilize your KMS key to encrypt and decrypt database credentials transparently.
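
A key policy for this setup might look like the sketch below, which builds the document in code. It is one possible shape under stated assumptions: the first statement is the standard one that lets IAM policies in the account govern the key, and the second lets a single application role use the key only through Secrets Manager. The function name and the `app_role_arn` parameter are hypothetical.

```python
import json


def key_policy(account_id, app_role_arn, region):
    """Build a key policy limiting data-plane use to one role via Secrets Manager."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "EnableIAMPolicies",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                "Sid": "AllowUseViaSecretsManager",
                "Effect": "Allow",
                "Principal": {"AWS": app_role_arn},
                "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {
                        "kms:ViaService": f"secretsmanager.{region}.amazonaws.com"
                    }
                },
            },
        ],
    }


def create_key(account_id, app_role_arn, region):
    """Create the key with this policy and return its ID (needs AWS credentials)."""
    import boto3  # imported here so key_policy works without boto3

    kms = boto3.client("kms", region_name=region)
    response = kms.create_key(
        Description="Database credential encryption",
        Policy=json.dumps(key_policy(account_id, app_role_arn, region)),
    )
    return response["KeyMetadata"]["KeyId"]
```

The kms:ViaService condition means the role cannot call KMS directly with this key. It can decrypt only when Secrets Manager makes the request on its behalf.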

Pricing: Costs are incurred based on the number of KMS keys you create and the number of requests made to KMS (e.g., encryption, decryption, key generation). Optimize your key management strategy to minimize unnecessary key creation and API calls.

Engineering Use Cases:

- Data Protection: Encrypt database credentials, API keys, and other sensitive configuration data to prevent unauthorized access.

- Compliance: Implement cryptographic controls in line with industry standards and regulatory requirements.

- Key Rotation: Automate key rotation to reduce the impact of compromised keys.

4. AWS Secrets Manager

Purpose: Secrets Manager is a secure repository for storing and managing database credentials and other confidential information. It integrates seamlessly with IAM and KMS, offering centralized control over your sensitive data.

Implementation Steps:

- Create a Secret: Store your database username, password, and other configuration data as a secret in Secrets Manager.

- Attach IAM Policies: Implement granular IAM policies to restrict access to secrets based on the principle of least privilege.

- Integrate with Applications: Utilize the Secrets Manager SDK or API to retrieve secrets securely for your applications, avoiding the need to hardcode sensitive credentials.
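
Retrieving a secret at runtime could look like the following sketch, assuming boto3 is installed and the caller's role is allowed to read the secret. It also assumes the secret is stored as a JSON object with `username`, `password`, and optional `host` and `port` fields. Adjust the parsing if your secret uses a different layout.

```python
import json


def parse_db_secret(secret_string):
    """Extract connection fields from a JSON-formatted secret string."""
    data = json.loads(secret_string)
    return {
        "username": data["username"],
        "password": data["password"],
        "host": data.get("host"),
        "port": int(data["port"]) if "port" in data else None,
    }


def fetch_db_credentials(secret_id, region):
    """Read the secret from Secrets Manager and parse it (needs AWS credentials)."""
    import boto3  # imported here so parse_db_secret works without boto3

    client = boto3.client("secretsmanager", region_name=region)
    response = client.get_secret_value(SecretId=secret_id)
    return parse_db_secret(response["SecretString"])
```

Fetching the secret on startup, or on each new connection, means a rotated password is picked up without redeploying the application.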

Pricing: Costs are associated with the number of secrets you store and the number of API calls made to Secrets Manager. Consider your organization's secret management needs when estimating costs.

Engineering Use Cases:

- Credentials Management: Centrally store and manage database passwords, API keys, and other sensitive information.

- Secrets Rotation: Automatically rotate secrets to reduce the risk of unauthorized access due to compromised credentials.

- Auditing: Track who accesses your secrets and when (through AWS CloudTrail logs), providing valuable insights for security investigations.

By leveraging this comprehensive suite of AWS security tools and services, you can establish a robust defense-in-depth strategy for your database infrastructure.

Continuous Improvement

The AWS security landscape constantly evolves, with new features, threats, and best practices emerging regularly. To maintain a robust security posture, staying abreast of these developments is crucial. Here are some valuable resources to help you stay ahead of the curve:

- AWS Training and Certification: AWS offers a wide range of training courses and certifications designed to deepen your understanding of cloud security. Consider pursuing certifications like the AWS Certified Security - Specialty to validate your expertise.

- Svitla Seminars and Blog Posts: Svitla Systems regularly hosts informative seminars and publishes insightful blog posts on AWS security best practices, emerging technologies, and real-world implementation case studies. These resources can provide valuable insights and practical guidance for enhancing your security strategy.

- Industry Conferences and Webinars: Participate in industry conferences and webinars focused on cloud security to gain exposure to the latest trends, techniques, and tools.

By proactively engaging with these resources and staying informed about the latest advancements in AWS security, you can ensure that your database infrastructure remains resilient, compliant, and protected against evolving threats. Remember, security is not a destination but a continuous journey of learning, adaptation, and improvement.
