Artificial Intelligence adoption has reached an inflection point: 78% of organizations now use AI in at least one business function, and 71% are already experimenting with generative AI. Yet despite this momentum, transformation hasn’t followed adoption, and there are still plenty of myths around the AI bubble.

According to MIT NANDA’s State of AI in Business 2025, 95% of enterprise AI initiatives deliver zero measurable ROI, creating what researchers call the GenAI Divide, a widening gap between experimentation and scalable value. This divide underscores a reality that business leaders can no longer ignore: AI success isn’t just about having the latest models. It’s about organizational readiness.

From data quality and infrastructure resilience to workforce skills, governance, and change management, readiness determines whether AI becomes a strategic advantage or an expensive science project.

As such, in 2025 and the years immediately ahead, leading companies will no longer merely “pilot” AI. They are focused on rewiring operations, governance, and talent models to embed AI into their decision-making fabric.

The latest data shows that firms where the CEO personally oversees AI governance report the strongest financial outcomes, while those without top-down alignment struggle to scale solutions beyond isolated use cases.

For organizations still unsure where to begin, a structured AI readiness checklist can transform uncertainty into action, helping assess foundational capabilities (technology, data, skills, ethics, and adaptability) before scaling enterprise-level AI programs.

Whether you’re a startup exploring predictive analytics or a global enterprise integrating generative AI across customer and operational functions, AI readiness is your competitive differentiator. In the sections ahead, we’ll explore what readiness really means in 2025 and beyond, unpack the pillars that define it, and provide a practical checklist for assessing your organization’s ability to harness AI responsibly, effectively, and profitably.

What Is AI Readiness and Why It Matters in 2025

AI readiness is an organization’s ability to consistently derive measurable business value from artificial-intelligence initiatives, without sacrificing security, governance, or agility. It’s the equilibrium between strategic alignment, operational capability, data integrity, and change resilience.

As of 2025, nearly eight in ten companies have adopted AI in at least one function, and more than 70% are experimenting with generative AI. Yet MIT warns that 95% of enterprise AI programs still fail to reach production or deliver ROI, largely because organizations aren’t structurally prepared to scale them.

The divide between adoption and transformation is widening: McKinsey data show that only 21% of companies have redesigned workflows to integrate AI effectively, while fewer than one-third follow recognized scaling practices such as KPI tracking, governance road-mapping, or cross-functional change management. Those that do report a stronger EBIT impact, a sign that readiness correlates directly with bottom-line results.

AI readiness ensures that organizations:

- Align leadership and governance. CEO-level oversight of AI governance delivers the highest financial impact among all deployment variables.

- Mitigate risk and reinforce trust. Mature adopters are now centralizing risk and compliance, with over 57% using a hub-and-spoke model for AI governance to manage privacy, IP, and explainability.

- Balance ambition with accountability. Leaders are shifting from isolated pilots toward enterprise-level transformation, embedding feedback loops, human-in-the-loop validation, and measurable ROI tracking.

- Develop adaptive talent. Half of AI-using companies plan to reskill significant portions of their workforce within three years, turning readiness into a people strategy as much as a tech initiative.

The Eight Pillars of AI Readiness

True AI readiness is built on a foundation of eight interconnected pillars. Together, they determine how effectively an organization can design, deploy, govern, and scale AI in ways that create measurable business impact.

1. Technology infrastructure

A scalable, cloud-native foundation is non-negotiable. Modern AI workloads demand elastic compute, secure APIs, and data pipelines capable of handling large-volume, low-latency interactions. According to McKinsey, companies that redesign their workflows and modernize infrastructure are 2x as likely to report EBIT gains from AI adoption.

Leaders are moving from fragmented systems toward platformized architectures that centralize experimentation, deployment, and monitoring, reducing model drift and speeding iteration cycles.

2. Data management and quality

Data is AI’s fuel, but readiness depends on how clean, connected, and contextualized that data is. Fully centralized data governance correlates with stronger trust and compliance outcomes. What does this look like in practice? Instituting metadata standards, lineage tracking, and privacy-by-design frameworks across the data lifecycle.

3. Skilled workforce

AI maturity isn’t just about algorithms. It’s about people who know how to use them. Half of AI-adopting organizations expect to reskill portions of their workforce within three years, with growing demand for data engineers, ML specialists, and AI-literate business leaders.

Training should extend beyond technical roles: the most successful enterprises are embedding AI fluency into every business function to close the understanding gap between builders and users.

4. Governance and ethics

AI governance has become a board-level priority, with 28% of organizations reporting that their CEO personally oversees AI governance, and as established, this top-down accountability is directly linked to higher ROI.

5. Security and privacy

Generative AI introduces new vectors of risk, from model poisoning to data leakage. Organizations are increasingly centralizing cybersecurity and data-privacy controls, with 57% managing them through a single “hub” model. Security-ready companies integrate continuous threat monitoring, zero-trust architectures, and clear privacy disclosures to protect both proprietary and customer data.

6. Cross-functional collaboration

AI readiness thrives where silos die. Organizations with strong collaboration between technical and operational teams, where IT, product, and business users co-design solutions, achieve greater success rates than those building AI tools in isolation. Readiness means embedding cross-disciplinary squads, establishing shared metrics, and ensuring that insights flow freely across departments.

7. Budget and ROI evaluation

Budget allocation reveals readiness maturity. Over 50% of GenAI budgets still go to visible but low-ROI functions like marketing, while back-office automation, which yields faster payback, is often underfunded. Organizations ready for the next AI wave set clear ROI frameworks, track performance indicators (e.g., productivity, customer satisfaction, cost reduction), and continuously re-prioritize investments based on value creation, not hype.

8. Change management and adoption

AI success hinges on adoption, not algorithms. Change-management excellence and senior-leader engagement are identified as top predictors of measurable AI impact. Readiness involves transparent communication, employee empowerment, and the creation of internal champions who model responsible AI use.

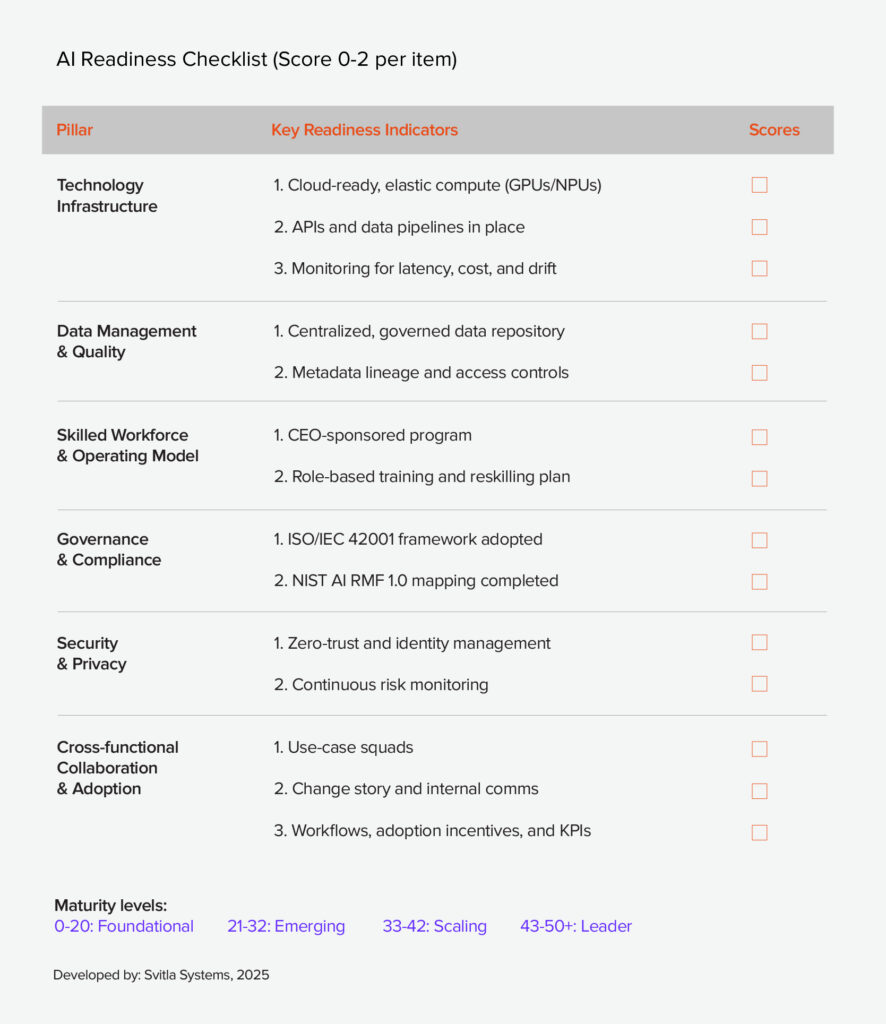

AI Readiness Checklist (2025)

How to score:

- For each item, assign 0–2.

- 0 = not in place

- 1 = partially/in progress

- 2 = fully in place and working at scale

Benchmarks: Companies showing CEO-level AI governance, redesigned workflows, and adoption/scaling practices see measurably higher EBIT impact from AI; these are the highest-leverage items to prioritize first.

Technology infrastructure

- Cloud-ready, elastic compute for AI workloads (e.g., GPUs/NPUs, autoscaling, budget guardrails). 0–2.

Why: AI value correlates with workflow redesign + platformization; infra is the enabler.

- Standardized APIs & event/data pipelines connecting apps, data stores, and models. 0–2.

- Environment promotion path (dev → staging → prod) with CI/CD for models/agents. 0–2.

- Observability for AI systems (latency, throughput, error budgets, unit economics per request). 0–2.

- Cost visibility (per-feature/per-call costing and budgets by BU). 0–2.

Quick win: Stand up a unified model gateway with usage metering to control spend while you scale.
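As a rough illustration of the metering idea behind such a gateway, here is a minimal Python sketch. It assumes a hypothetical per-1,000-token price table and per-business-unit budgets; `UsageMeter`, the model names, and the prices are invented for illustration and do not describe any real gateway product.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens (assumption; real prices vary by provider).
PRICE_PER_1K_TOKENS = {"small-model": 0.5, "large-model": 5.0}


@dataclass
class UsageMeter:
    """Tracks spend per business unit and enforces a simple budget guardrail."""

    budgets: dict[str, float]  # business unit -> budget in USD
    spend: dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, business_unit: str, model: str, tokens: int) -> float:
        """Record one call and return its cost; refuse calls that would exceed the budget."""
        cost = PRICE_PER_1K_TOKENS[model] * tokens / 1000
        if self.spend[business_unit] + cost > self.budgets[business_unit]:
            raise RuntimeError(f"{business_unit} would exceed its AI budget")
        self.spend[business_unit] += cost
        return cost


meter = UsageMeter(budgets={"support": 10.0})
meter.record("support", "small-model", 2000)  # costs 1.0 under the assumed prices
```

Routing every model call through one metering point like this is what makes per-call costing and budgets by business unit possible later on.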

Data management & quality

- Centralized, governed data with lineage/metadata and role-based access. 0–2.

Why: Data governance is widely centralized among leaders and underpins trust and compliance.

- High-quality, de-duplicated, contextual data for priority AI use cases. 0–2.

- PII handling, retention, minimization, and anonymization policies documented + enforced. 0–2.

- Real-time/near real-time data feeds for AI that require fresh context. 0–2.

- Feedback data capture (human ratings, outcomes) tied back to training/evaluation. 0–2.

Tip: A readiness checklist should start with data centralization and access before tool selection.

Skilled workforce & operating model

- A CEO-sponsored program with an accountable executive owner and a budget. 0–2.

Why: CEO oversight of AI governance correlates with higher bottom-line impact.

- Role-based AI training (builders, product, risk/compliance, and frontline users). 0–2.

- Reskilling plan and targets (e.g., % workforce reskilled next 12–36 months). 0–2.

Data point: Organizations expect more reskilling over the next three years as AI scales.

- Hybrid hub-and-spoke model (central CoE for governance/risk; federated delivery squads). 0–2.

Why: Risk/compliance & data governance are often centralized; talent is more hybrid.

Governance, risk & compliance (GRC)

- Adopt an AI governance standard (e.g., ISO/IEC 42001 AI Management System) with policies, controls, and audits. 0–2.

- Risk management mapped to NIST AI RMF 1.0 (Govern → Map → Measure → Manage). 0–2.

- Model documentation (intended use, training data sources, eval methods, limitations). 0–2.

- Human-in-the-loop & review thresholds for high-impact decisions. 0–2.

- Risk register & incident response (hallucinations, IP leakage, bias, safety). 0–2.

- EU AI Act readiness (risk classification, prohibited-use checks, GPAI obligations, record-keeping, technical documentation). 0–2.

Why now: The AI Act is already in force (since Aug 1, 2024); GPAI obligations apply as of Aug 2, 2025, with general applicability starting Aug 2, 2026 (with exceptions/transition paths).

Security & privacy

- Zero-trust security posture for data, models, and agent tools; least-privilege secrets management. 0–2.

- Secure model interaction (prompt/input filtering, egress controls, red-teaming). 0–2.

- IP protection & data isolation (fine-grained access, tenant separation, no training on customer data without consent). 0–2.

- Continuous monitoring for abuse, jailbreaks, data exfiltration, and model drift. 0–2.

- Risk mitigation for inaccuracy, IP, cybersecurity, and privacy, the most commonly managed gen-AI risks in 2024–2025. 0–2.

Cross-functional collaboration & adoption

- Use-case squads (Product + Ops + Data/ML + Security/Risk) with a single success metric. 0–2.

- Change story + internal communications (what, why, how; benefits; governance). 0–2.

- Embedded workflows (AI inside the tools people already use; not sidecar chat only). 0–2.

- Adoption incentives & champions (managers' role-model usage). 0–2.

- KPI tracking for adoption & ROI (active users, time-to-complete, error rates, satisfaction). 0–2.

Why: Tracking KPIs and clear roadmaps are top drivers of EBIT impact; fewer than one-third of firms do this well.

Financials, portfolio & ROI

- Portfolio strategy prioritizing workflow-level value (not “tool proliferation”). 0–2.

- Business-case templates (benefit drivers, cost-to-serve, risks, payback period). 0–2.

- Balanced investment mix (front-office + back-office automation with clear ROI). 0–2.

Signal: Many orgs over-index funding to S&M because attribution is easier; rebalance toward ops where ROI can be higher.

- Unit economics (cost per task, per record, per ticket deflected). 0–2.

- Exit/scale criteria (kill gates, readiness gates, and scaling playbooks). 0–2.

Model/agent lifecycle & quality

- Evaluation suite (accuracy, robustness, safety, bias, privacy, latency, cost) per use case; target thresholds set. 0–2.

- Red-teaming & safety testing before production; periodic re-evals. 0–2.

- Feedback loops (human ratings, outcome verification) feed continuous improvement. 0–2.

- Drift detection & rollback for prompts, models, RAG corpora, tools. 0–2.

- Content review policies proportional to risk; define % outputs reviewed (e.g., 100% for high-risk domains). 0–2.

Context: Organizations vary widely: 27% review 100% of gen-AI outputs; a similar share reviews ≤20%—codify your standard.

Scoring guide

- 0–20: Foundational. Focus on data governance, security/privacy, and CEO-level governance first.

- 21–32: Emerging. Establish KPIs/roadmap, centralize risk, and harden infra/observability.

- 33–42: Scaling. Expand workflow redesign and back-office automation for line-of-business ROI.

- 43+: Leader. Industrialize evaluation, feedback, and cost discipline; pursue end-to-end domain transformations. (Rationale: Organizations that redesign workflows, track KPIs, and put senior leaders in charge of governance report higher EBIT impact.)
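The scoring arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not an official scoring tool: the function name `readiness_band` is an assumption, and the band boundaries are copied from the guide above. Note that the checklist as written contains 40 scored items, so totals can run as high as 80; the sketch simply treats any total of 43 or more as Leader.

```python
# Score bands from the scoring guide: (lower bound, label), highest band first.
BANDS = [(43, "Leader"), (33, "Scaling"), (21, "Emerging"), (0, "Foundational")]


def readiness_band(item_scores: list[int]) -> tuple[int, str]:
    """Sum per-item scores (each 0, 1, or 2) and map the total to a maturity band."""
    if any(score not in (0, 1, 2) for score in item_scores):
        raise ValueError("each checklist item must be scored 0, 1, or 2")
    total = sum(item_scores)
    for lower_bound, label in BANDS:
        if total >= lower_bound:
            return total, label
    raise AssertionError("unreachable: totals are never negative")


# Example: 16 items fully in place and 1 in progress.
print(readiness_band([2] * 16 + [1]))
```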

Crafting an AI Implementation Strategy

AI readiness sets the foundation, but execution is where strategy becomes value. Organizations that translate assessment results into a structured implementation roadmap outperform those that rush to deploy isolated pilots. The key is to blend top-down alignment with bottom-up empowerment, ensuring governance and agility coexist.

1. Build alignment from the top down

AI transformation begins with leadership ownership. Companies in which the CEO or C-suite directly sponsors AI initiatives are twice as likely to achieve measurable EBIT impact.

Svitla recommends forming an AI Steering Committee that includes representatives from IT, data, operations, and compliance. This committee should:

- Define the AI vision and link it to enterprise KPIs.

- Approve an AI policy charter covering governance, ethics, and security.

- Oversee funding, risk, and compliance alignment across departments.

Pro Tip: Executive alignment should be formalized via OKRs or governance scorecards, not just vision statements.

2. Co-design with cross-functional teams

A successful AI strategy isn’t imposed; it’s co-created. Cross-functional teams double success rates compared to siloed development. This means bringing engineers, data scientists, and domain experts into multi-disciplinary “AI squads.” Each squad should:

- Start with a business problem, not a model.

- Use design thinking to re-imagine workflows.

- Prototype with measurable user and business outcomes.

Embedding Svitla’s agile delivery approach helps accelerate from pilot to production while maintaining transparency and iteration speed.

3. Adopt a hybrid governance model

Governance doesn’t have to slow innovation. The most resilient enterprises are shifting to a hub-and-spoke model: a central AI Center of Excellence (CoE) defines standards, and distributed teams build and innovate within those guardrails. This ensures consistency in model validation, risk management, and compliance while preserving creative autonomy.

Core components of hybrid governance:

- Centralize policy, risk, and tooling decisions in the CoE.

- Federate use-case ownership to business units.

- Maintain a shared metrics framework (e.g., adoption rate, ROI per use case, compliance score).

4. Prioritize use cases strategically

Rather than chasing hype, mature organizations sequence their AI adoption. Start with high-impact, low-risk workflows: predictive analytics, anomaly detection, or customer-service optimization, where data is already available and outcomes are measurable. Then, progress toward complex integrations like generative content automation or R&D acceleration.

5. Institutionalize feedback and continuous learning

AI implementation is a cycle, not a project. Best-in-class organizations deploy MLOps and continuous-learning frameworks that monitor accuracy, drift, and user satisfaction in real time.

Svitla’s engineering teams help clients design closed feedback loops, connecting data ingestion, model retraining, and user telemetry, so every iteration improves system intelligence.

6. Track success through clear metrics

Define metrics across three tiers:

- Outcome metrics: Cost savings, revenue lift, efficiency.

- Platform metrics: System uptime, latency, model accuracy, and drift.

- Adoption metrics: Active users, task-completion rate, satisfaction (CSAT/NPS).

These KPIs align business value with model performance, ensuring AI remains an operational engine, not a novelty.

AI implementation is both technical and cultural. Leaders must balance structure with speed, fostering an environment where responsible experimentation is rewarded, data is democratized, and outcomes are measurable. By combining top-down leadership with Svitla’s proven engineering and consulting expertise, organizations can turn readiness into sustained competitive advantage.

Measuring AI Readiness Success (2025)

AI readiness “shows up” in numbers. Treat it like product ops: define what good looks like, instrument the workflows, and review on a fixed cadence. Below is a four-layer KPI stack senior leaders can use to prove value, control risk, and guide reinvestment.

Business outcomes (prove value)

Why it matters: Boards and CFOs are scrutinizing AI spend; leaders want measurable gains, not pilots. Recent exec surveys highlight rising usage but uneven returns unless success is explicitly tracked (e.g., ROI, productivity, cost-to-serve).

Core KPIs (with formulas & targets):

- Productivity uplift (%) = (Baseline hours – Post-AI hours) / Baseline hours.

- Target: ≥10–20% in knowledge workflows where AI is embedded; some large engineering orgs report ~10%+ productivity gains as a directional benchmark.

- Cost per transaction/ticket/task = Total process cost ÷ completed volume.

- Target: 15–30% reduction in the first two quarters for high-volume processes (support, intake, AP).

- Revenue lift attributable to AI (%) = AI-impacted revenue ÷ total revenue.

- Target: Start with leading indicators (win rate, conversion, AOV) before top-line attribution stabilizes.

- Cycle time to decision (hrs/days) = Avg time from intake → decision/fulfillment.

- Target: 25–50% reduction in decision-heavy workflows (risk review, claims triage, QA).

Cadence/owners: Monthly business reviews led by Product/Ops with Finance; roll into quarterly board materials.
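The KPI formulas above translate directly into code. The following Python sketch is illustrative only; the function names are assumptions, and each function returns a fraction (multiply by 100 for a percentage).

```python
def productivity_uplift(baseline_hours: float, post_ai_hours: float) -> float:
    """(Baseline hours - Post-AI hours) / Baseline hours."""
    return (baseline_hours - post_ai_hours) / baseline_hours


def cost_per_task(total_process_cost: float, completed_volume: int) -> float:
    """Total process cost divided by completed volume."""
    return total_process_cost / completed_volume


def ai_revenue_share(ai_impacted_revenue: float, total_revenue: float) -> float:
    """AI-impacted revenue divided by total revenue."""
    return ai_impacted_revenue / total_revenue


def relative_reduction(before: float, after: float) -> float:
    """Generic helper for reduction targets (cost per task, cycle time)."""
    return (before - after) / before


# Example: a team that spent 400 hours on a workflow now spends 340.
uplift = productivity_uplift(baseline_hours=400, post_ai_hours=340)
print(f"Productivity uplift: {uplift:.0%}")
```

Capturing the baseline before rollout matters more than the arithmetic: without a pre-AI measurement, none of these ratios can be computed credibly.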

Adoption & change (ensure it sticks)

Why it matters: Without sustained use, ROI evaporates. Executives increasingly demand usage-based signals as leading indicators of value.

Core KPIs:

- Weekly Active Users (WAU)/Eligible Users (%) and WAU/MAU stickiness.

- Target: ≥60% WAU of eligible users by Q2; WAU/MAU ≥0.6.

- Task penetration (%) = AI-assisted executions ÷ total executions for the same task.

- Target: ≥70% for targeted tasks within 90 days of rollout.

- User satisfaction (CSAT/NPS) with AI features and time-to-first-value (TTFV).

- Target: CSAT ≥4.2/5; TTFV ≤2 weeks post-enablement.

- Change readiness index (trained users ÷ target users; completion of role-based curricula).

- Rationale: Formal role-based upskilling is a best practice in enterprise adoption programs.

Cadence/owners: Weekly adoption dashboard; monthly enablement review with HR/L&D.
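A minimal Python sketch of the adoption ratios above, computed from a usage log. It assumes events are `(user_id, date)` pairs and that "weekly" and "monthly" mean trailing 7-day and 30-day windows; both the event shape and the window lengths are assumptions, not definitions from any particular analytics product.

```python
from datetime import date, timedelta


def active_users(events: list[tuple[str, date]], as_of: date, window_days: int) -> set[str]:
    """Distinct users with at least one event in the trailing window ending on as_of."""
    start = as_of - timedelta(days=window_days - 1)
    return {user for user, day in events if start <= day <= as_of}


def adoption_kpis(events: list[tuple[str, date]], eligible_users: int, as_of: date) -> dict[str, float]:
    """WAU as a share of eligible users, plus WAU/MAU stickiness."""
    wau = active_users(events, as_of, 7)
    mau = active_users(events, as_of, 30)
    return {
        "wau_of_eligible": len(wau) / eligible_users,
        "stickiness": len(wau) / len(mau) if mau else 0.0,
    }


def task_penetration(ai_assisted_executions: int, total_executions: int) -> float:
    """AI-assisted executions divided by total executions of the same task."""
    return ai_assisted_executions / total_executions
```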

Platform, data & model quality (make it reliable)

Why it matters: Responsible AI guidance stresses operationalizing governance and quality, not just principles. WEF’s 2025 playbook and NIST’s AI RMF (incl. GenAI profile) call for operational KPIs tied to reliability, security, and risk mitigation.

Platform reliability & efficiency

- Uptime (%) and p95 latency (ms) per capability (chat, RAG, agents, batch).

- Targets: ≥99.9% uptime; p95 <800 ms for interactive flows.

- Unit economics (cost per generated output / per retrieval / per solved ticket).

- Target: Down-trend ≥20% over two quarters via prompt/model optimization and caching.

- Error budget burn for AI features (SLOs vs. actual).
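For teams new to these reliability measures, here is a small Python sketch of p95 latency and error budget burn. It uses the nearest-rank percentile method, which is one of several common definitions, and treats the error budget as the failure rate the SLO allows; the function names are illustrative assumptions.

```python
def percentile_nearest_rank(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need at least one value and 0 < pct <= 100")
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct * n / 100) using integer math
    return ordered[rank - 1]


def error_budget_burn(failed: int, total: int, slo_target: float) -> float:
    """Share of the error budget consumed: observed failure rate / allowed failure rate."""
    allowed_failure_rate = 1.0 - slo_target
    return (failed / total) / allowed_failure_rate


# Example: 20 latency samples from 10 ms to 200 ms, and 5 failures in 10,000 requests.
latencies_ms = [float(ms) for ms in range(10, 210, 10)]
print(percentile_nearest_rank(latencies_ms, 95))
print(error_budget_burn(failed=5, total=10_000, slo_target=0.999))
```

A burn value above 1.0 means the feature has used more than its whole error budget for the period, which is a natural trigger for pausing new releases.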

Data quality (ISO/IEC 25012 dimensions)

Track a minimal set across accuracy, completeness, consistency, timeliness, accessibility, and traceability; score 0–2 for each dataset powering AI features. Use ISO/IEC 25012 as the canonical model.

Example measures:

- Freshness (timeliness) = % records < X hours old.

- Completeness = % required fields populated.

- Lineage coverage (traceability) = % tables with end-to-end lineage documented.
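The three example measures can be sketched as follows. This Python illustration assumes records are dictionaries carrying an `updated_at` timestamp and that lineage documentation is tracked as a set of table names; those shapes and the function names are assumptions made for the example.

```python
from datetime import datetime, timedelta


def freshness(records: list[dict], now: datetime, max_age_hours: float) -> float:
    """Share of records updated less than max_age_hours ago."""
    limit = timedelta(hours=max_age_hours)
    fresh = sum(1 for record in records if now - record["updated_at"] < limit)
    return fresh / len(records)


def completeness(records: list[dict], required_fields: list[str]) -> float:
    """Share of required field values that are populated (not missing, None, or empty)."""
    populated = sum(
        1
        for record in records
        for name in required_fields
        if record.get(name) not in (None, "")
    )
    return populated / (len(records) * len(required_fields))


def lineage_coverage(tables_with_lineage: set[str], all_tables: set[str]) -> float:
    """Share of tables with end-to-end lineage documented."""
    return len(tables_with_lineage & all_tables) / len(all_tables)
```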

Model/agent performance

- Task accuracy/quality (domain eval sets, golden sets).

- Hallucination rate (%) = Invalid outputs ÷ total outputs (by severity tier).

- Tool-call success rate (%) for agents; RAG faithfulness (source-grounding score).

- Drift index (distribution shift vs. baseline) and rollback MTTR.

Cadence/owners: Weekly engineering/ML ops review; monthly data governance committee.
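A hedged Python sketch of two of the model measures above. The hallucination rate follows the formula given (invalid outputs divided by total outputs, split by severity tier). For the drift index, the text does not name a method, so the sketch uses the Population Stability Index (PSI) as one common choice; treat that substitution, the input shapes, and the function names as assumptions.

```python
import math
from collections import Counter
from typing import Optional


def hallucination_rate_by_tier(outputs: list[Optional[str]]) -> dict[str, float]:
    """outputs holds a severity label per invalid output and None per valid output."""
    total = len(outputs)
    invalid = Counter(tier for tier in outputs if tier is not None)
    return {tier: count / total for tier, count in invalid.items()}


def population_stability_index(baseline: list[float], current: list[float]) -> float:
    """PSI over matching bins; inputs are per-bin proportions that each sum to 1."""
    eps = 1e-6  # avoids log(0) and division by zero for empty bins
    psi = 0.0
    for expected, actual in zip(baseline, current):
        expected, actual = max(expected, eps), max(actual, eps)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


# Example: 10 reviewed outputs, one low-severity and one high-severity hallucination.
print(hallucination_rate_by_tier([None] * 8 + ["low", "high"]))
```

PSI is zero when the two distributions match and grows as they diverge, so it can feed a simple alert threshold agreed with the ML ops team.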

Risk, security & compliance (control downside)

Why it matters: As adoption scales, organizations report material losses from compliance failures, flawed outputs, and security/privacy gaps unless they strengthen Responsible AI controls and track them explicitly.

Control KPIs (aligned to NIST AI RMF & sector guidance):

- Policy coverage (%) = AI use cases with mapped risks & controls ÷ total use cases.

- High-risk use cases with human-in-the-loop (%) (≥95% for regulated/impactful decisions).

- Security incidents tied to AI per quarter (target: → 0; trend and severity weighted).

- Third-party AI due diligence completion (%) and MFA/zero-trust coverage (%), mirroring recent supervisory guidance in financial services.

- Bias & explainability checks (pass rate) on scheduled evaluations.

- Copyright/IP leakage events (zero tolerance; monitor egress and prompts).

- Regulatory readiness score (EU AI Act mapping, audit trails, documentation completeness). (If you’re adopting ISO/IEC or WEF playbook controls, reflect them here.)

Cadence/owners: Monthly risk committee; quarterly audit readiness review (Legal, CISO, Compliance).
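Two of the control KPIs above, policy coverage and human-in-the-loop coverage, can be computed from a simple use-case register. The Python sketch below assumes a hypothetical `UseCase` record with three boolean flags; real registers will carry far more detail.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Hypothetical register entry for one AI use case."""

    name: str
    risks_mapped: bool   # risks and controls have been mapped
    high_risk: bool      # regulated or high-impact decision
    human_in_loop: bool  # a human reviews outputs before they take effect


def policy_coverage(use_cases: list[UseCase]) -> float:
    """Use cases with mapped risks and controls divided by total use cases."""
    return sum(uc.risks_mapped for uc in use_cases) / len(use_cases)


def hitl_coverage(use_cases: list[UseCase]) -> float:
    """Share of high-risk use cases that have human-in-the-loop review."""
    high_risk = [uc for uc in use_cases if uc.high_risk]
    if not high_risk:
        return 1.0  # nothing high-risk to cover
    return sum(uc.human_in_loop for uc in high_risk) / len(high_risk)
```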

Executive dashboard: What to show every quarter

- Value: Productivity uplift, cost-to-serve trend, top three AI features by ROI.

- Adoption: WAU/MAU, task penetration, TTFV, satisfaction.

- Reliability: Uptime, p95 latency, unit economics trend.

- Data & Models: Data quality scorecard (ISO 25012 dims), hallucination/drift, agent tool-call success.

- Risk & Compliance: Policy coverage, incidents, third-party due diligence, review rates, EU AI Act readiness.

Review cadence & gates

- Weekly: Adoption & platform KPIs; incident watchlist.

- Monthly: Executive “AI Value & Risk” review (Product, Finance, CISO, Data Gov).

- Quarterly: Scale/Kill gates by use case: continue, pivot, or retire based on ROI, reliability, and risk scores (thresholds pre-agreed).
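The quarterly gate can be made explicit in code so decisions stay consistent across use cases. This Python sketch is one possible rule, not a prescribed one: the thresholds are placeholders to be pre-agreed, and the mapping (all three criteria met means continue, two means pivot, fewer means retire) is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateThresholds:
    """Pre-agreed thresholds; the values used below are placeholders."""

    min_roi: float          # e.g. benefit-to-cost ratio
    min_reliability: float  # e.g. uptime as a fraction
    max_risk: float         # risk score, where lower is better


def gate_decision(roi: float, reliability: float, risk: float, t: GateThresholds) -> str:
    """Return 'continue', 'pivot', or 'retire' based on how many criteria are met."""
    criteria_met = sum(
        [roi >= t.min_roi, reliability >= t.min_reliability, risk <= t.max_risk]
    )
    if criteria_met == 3:
        return "continue"
    if criteria_met == 2:
        return "pivot"
    return "retire"


thresholds = GateThresholds(min_roi=1.5, min_reliability=0.999, max_risk=0.3)
print(gate_decision(roi=2.0, reliability=0.9995, risk=0.2, t=thresholds))
```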

Benchmarking notes (what “good” resembles in 2025)

- Org-wide: Usage-backed value stories win budget; usage is now a primary health signal for enterprise AI tools.

- Data: Organizations that explicitly manage ISO-style data quality dimensions ship more reliable features and reduce remediation toil.

- Risk: Firms with mature Responsible AI frameworks report better cost savings and employee satisfaction—and fewer loss events.

- Governance: Translate principles into operational controls and playbooks (WEF 2025).

As organizations race to adopt AI, the differentiator isn’t models. It’s readiness. Your ability to align leadership, data and tech infrastructure, workforce capabilities, governance, and risk management determines whether AI becomes a strategic multiplier or a sideline experiment.

The checklist provided here offers a clear diagnostic of your current maturity, from foundational (0–20) to leader (43+). But a score alone isn’t enough: the winning path involves intentional strategy, disciplined metrics, and continuous learning.

If you’re ready to move from “pilot” to “production,” from “proof of concept” to “powering core operations,” readiness becomes a strategic priority. At Svitla Systems, we partner with you to:

- Navigate the eight pillars of AI readiness.

- Embed change, data, and governance at every level.

- Deliver measurable business outcomes: faster, safer, smarter.

Let’s explore the next horizon of intelligent operations together. Reach out when you’re ready to examine where you stand, what it takes to bridge the gap, and how we can accelerate your transformation.