Analytics sits at the core of the capital markets industry. Yet, its quality, freshness, and accuracy are being increasingly questioned. Many of the data analytics systems used by front-line employees are not user-friendly and still require manual efforts for data input and modeling. Records often end up being incomplete as internal and external data sources are hard to merge due to interoperability issues.

For the above reasons, investment companies are actively exploring new use cases for advanced analytics technologies — Big Data analytics, machine learning (ML) models, and artificial intelligence (AI).

Globally, banking and investment service companies plan to spend over $652 billion on IT services this year, with data and analytics programs getting the most funding, alongside cybersecurity and cloud computing.

A strong business case starts with understanding the capabilities and constraints of the available solutions. Let’s take a look at how market players leverage data analytics in investment banking to improve risk management, team productivity, and revenue growth. We’ll also examine the common challenges of deploying advanced analytics solutions in finance, paired with possible solutions.

Use Cases of Data Analytics in Investment Banking

Data is a crude asset — it’s the application of analytics that makes it valuable. There are six major areas where advanced analytics solutions can create substantial value in investment banking.

Risk Management

Times and again history proves the importance of risk management in the financial sector. Be it liquidity risks (which tanked SVB Financial Group) or poor credit management practices (which triggered Archegos Capital collapse and later affected Credit Suisse). Fraud, cybersecurity attacks, geopolitics, new regulations — financial institutions face a constantly expanding risk radar.

The ability to predict and anticipate risks is therefore critical for the capital market players. Yet, 70% of banking industry professionals deal with time-consuming and costly manual risk processes.

Machine learning models have already shown great potential for large-scale data analysis for the banking industry at large. In the capital markets, such algorithms can be trained on transactional data to:

- Identify causal relationships between different events and the network risks they present

- Model different company performance scenarios under stress conditions

- Predict the likelihood of loan defaults and liquidity issues

Barclays, for example, relies on agent-based modeling technology for risk management, provided by its technology partner Simudyne. Simudyne’s software allows modeling advanced what-if scenarios to inform the team about possible credit risk, market risk, and operational risk modeling for the bank’s performance in challenging economic conditions. In the future, Barclays also wants to extend predictive modeling to support stress testing scenarios to ensure optimal liquidity levels for withstanding economic shocks.

Separately, Barclays also developed a proprietary backtesting methodology to estimate its potential future exposure to counterparty credit risks. The model uses historical price data to validate the efficacy of existing risk factor models by quantifying all maximum expected lifetime credit exposure under pre-determined probabilities.

The new model helps Barclay’s stay compliant with the Basel III framework. It also helped reduce the bank’s exposure to uncovered high-risk positions within a small probability such as 0.01-0.05%.

Fraud Prevention

Securities fraud is a criminal offense, yet high-profile investor defraudment cases regularly come up in the news headlines. Worryingly, 69% of global executives and risk professionals expect financial crime risks to increase over the next 12 months, according to a Kroll report.

Emerging technologies like big data analytics and machine learning can act as a force multiplier, allowing organizations to improve the completeness, accuracy, and fidelity of anti-fraud assessments. Further, such solutions can assist in identifying previously unknown risks and malicious behavior patterns.

In fact, the European Financial Action Task Force (FATF) actively encourages organizations to explore new technologies for anti-money laundering and anti-terrorist financing (AML/CFT), namely machine learning and data analytics:

“[Machine learning and AI-based] tools can partially or fully automate the process of risk analysis, allowing it to take account of a greater volume of data, and to identify emerging risks which do not correspond to already-understood profiles. Such tools can also offer an alternative means of identifying risks – in effect acting as a semi-independent check on the conclusions of traditional risk analysis”

The US Anti-Money Laundering Act (AMLA) of 2020 also supports the usage of innovative approaches to AML, such as machine learning to reinforce FIs’ and Financial Crimes Enforcement Network’s (FinCEN) crime detection capabilities.

And many banks already do just that. CitiBank recently partnered with IBM to create an advanced analytics system for performing corporate audits. Using IBM Cloud Pak for Data and IBM Open Pages with Watson technology, CitiBank created a set of new tools for analyzing and scoring the control definitions; processing transcripts between agents and customers, and assisting auditors with content look-up across hundreds of pages of audit manuals. With a new set of self-service tools, the team can deliver more precise reporting to regulators at a faster rate.

Advanced analytics models also extend the company’s monitoring capabilities. Operating in the background, fraud detection algorithms can flag suspicious trading transactions and portfolios that continuously incur losses. Then alert human analysis to initiate further investigation. A group of researchers also tested a machine learning algorithm for predicting the likelihood of committing financial fraud among investment advisors in Canada. Trained on a dataset of historical fraud cases, the model identified a set of factors, indicative of potential fraud with 99.5% accuracy rates.

Cybersecurity

The financial sector is a prime target for cyber-criminals. Last year, the UK finance industry prevented over £1.2 billion of unauthorized fraud from landing with cyber-criminals, although a substantial amount of client funds have been lost too.

Apart from external cyber risks, financial organizations also face internal threats. Suboptimal cybersecurity security policies, paired with the inevitable employee missteps can result in costly data breaches.

With an expanded technical portfolio, however, standard cybersecurity monitoring practices and rule-based detection systems often fall short. High rates of false positives result in an alert storm for the often understaffed cybersecurity teams. Lack of visibility into certain digital systems or channels increases potential risk exposure.

Big data technologies help consolidate all the security signals and then use this intel to fine-tune threat detection and prevention practices. At Goldman Sachs, cybersecurity is powered by a Hadoop cluster, which aggregates security content (web logs, firewall data, network packets, etc) from the entire organization. Different analytics techniques then get applied for cyber risk detection, according to Don Duet, the company’s CTO. Such a setup allows Goldman Sachs to maintain 360-degree visibility within its corporate perimeter and detect any signs of cyber threats at the onset.

Portfolio Construction and Optimization

Automated investment management services have been around since the late 1990s, but the sectors’ growth really took off in the early 2010s, when the first digital-native robo-advisors like Betterment and Wealthfront emerged in the US market.

Traditional capital market players soon caught up with the demand for more accessible, personalized investment services. In 2015, Vanguard launched a Personal Advisor Services (PAS) to help clients automatically construct better portfolios. The tool uses Monte Carlo simulations to evaluate different potential financial outcomes for the client to determine the best investment strategy and recommend approaches to portfolio rebalancing and tax loss harvesting.

Deutsche Bank, in turn, launched a α-DIG in 2018 — a web application for evaluating the companies’ Environmental, Social, and Governance (ESG) performance. The tool uses natural language processing (NLP) to scan the company’s annual reports for relevant information and uses data analytics to analyze open data for the web to provide conclusive reports.

Apart from offering self-service experience to clients, data analytics in investment management can also empower your advisors with better intelligence for decision-making.

Morgan Stanley’s WealthDesk platform, used by the company’s 16,000+ registered investment advisors, integrates a “next-best action” machine learning system. The algorithmic engine helps agents match investment possibilities to client preferences and recent life events. For example, if the client recently had a new child, the system may suggest looking into different college funds. In addition, the system alerts about any significant changes in the client’s portfolio, low-cash balances, and margin calls, helping advisors take more timely action.

Quantitative Investment

Quantitative investing relies on advanced mathematical models (and more recently — AI algorithms) to help investors make more profitable decisions. While traditional quant models start with human intuition, algorithmic solutions try to derive meaning from real-world data.

Effectively, such models can be trained to zoom in on specific market signals — the previously undiscovered or overlooked alpha factors. In other words: ML algorithms help quant investors discover new drivers of returns in the virtual haystack of open web data. Last year proved the efficacy of quant approaches.

Quantitative investment strategies brought a 3.9% return last year, while the S&P 500 was down by 19%, and the World Government Bond Index was down by 17%.

At present, nine out of ten hedge fund traders already use AI and ML technologies at least in some capacity. WATS® Equity Electronic Trading system by Well Fargo, for example, uses machine learning to provide multi-factor analytics, which combines macro, micro, and order-level profiling for performed trades. The bank also built a separate machine learning model for automatic portfolio assessments to gain better insights into the differential performance factors.

Man AHL has been using trading machine learning-based systems since early 2014. The hedge fund’s large-scale models process over 2.5 billion ticks of data per day to draw interference from and better model order books.

AI-based pure-play hedge funds are also demonstrating strong performance. Companies like Aidyia Holdings, Cerebellum Capital, and Numerai rely exclusively on AI/ML-generated insights to inform their investment strategies. Numerai has consistently outperformed neutral indexes and traditional quant funds over the past four years.

Robeco, a Dutch asset management firm, in turn, plans to use machine learning tools for quantitative credit. Their goal: Improve risk-adjusted returns for strategies that use the value factor. Early tests have shown that the machine learning-based approach offered more accurate risk estimates than traditional methods.

Investor Sentiment Analysis

On a daily basis, capital market firms model and predict how the changes in economic conditions, monetary policies, and customer sentiment will impact their investment outcomes and risk vulnerability. One offhand Tweet from a CEO can make the stock go down one day and soar the other. While meme stocks, hyped on social media, can cause greater market volatility.

Over the years, companies have tested a variety of analytical models for investor sentiment analysis, ranging from rule-based sentiment analyzers and NLP to deep neural networks. Such algorithms now power some of the best investment intelligence tools including Bloomberg Terminal, Eikon by Refinitive, S&P Capital IQ Platform, and AlphaSense among others.

Bloomberg, for example, extensively relies on a combination of traditional rules-based programming and machine learning models. The company uses ML to extract useful insights from millions of legal briefs, contracts, court opinions, and dockets to provide customers with in-depth insights. Thanks to this, the time from when a document is published to when it's available to clients has reduced from months to minutes.

Refinitiv, in turn, developed proprietary MarketPsych Analytics sentiment scores, which are based on aggregated net positive versus negative references about the tracked companies, collected from online resources (social media, news websites). The system scans for new references across thousands of assets in a matter of moments to create time series predictions with high accuracy.

Challenges of Using Data Analytics in Investment Management

Although the capital markets sector has torrents of available data, most organizations lack the tools to effectively process it. In fact, 75% of commercial and corporate banks are still at the experimentation stage of advanced analytics.

Generally, data analytics initiatives are slow to progress for several reasons:

Data Veracity

One of the four ‘Vs’ of big data analytics is veracity — a high degree of uncertainty about the quality of that data and its availability. There’s always the question of whether the available data provides an adequate representation of the selected analytics use case. For example, credit scoring models have been found to exhibit bias against lower-income families and minority borrowers. The underlying reason was that people in these categories had more misleading data in their credit scores, which were used for model training.

The aggregation of multiple datasets is one solution to the above issue. However, research shows that datasets collected under heterogeneous conditions (i.e. different populations, regimes, or sampling methods) can improve the analytics’ model performance, but are also prone to confounding, sampling selection, and cross-population biases.

At the same time, not all financial data is accessible for analysis due to regulatory requirements. Global privacy laws restrict the usage of personally identifiable information (PII) in analytical models.

The new subset of Privacy Enhancing Technologies (PETs) such as differential privacy, federated analysis, homomorphic encryption, and secure multi-party computation attempt to address those shortcomings.

Societe Generale (SG), for example, recently launched a DANIE project — a cross-industry effort to develop distributed reconciliation capability to securely and anonymously cross-validate financial datasets among the project participants to ensure higher data integrity.

The usage of synthetic data — machine-generated data sets — is another approach to overcoming data limitations. Synthetic datasets provide sufficient context for effective model training without exposing any private information. JP Morgan developed several synthetic data sets, representing AML behaviors, sample customer journeys, and market execution. These are now helping the bank train models for use cases, where human-generated data is limited or restricted.

Complex Data Landscape

The two other important Vs of big data are volume and velocity. New data gets generated in tremendous quantities at blazing speed. The problem, however, is its operationalization. Traditional relational databases, used for analytics, cannot cope with the load. Data extraction, transformation, and loading (ETL) processes are slow and/or expensive — so is data querying.

Moreover, large data volumes require greater computing capacities, prompting companies to adopt cloud databases and analytics platforms in place of on-premises ones. Such decisions often trigger wider transformations in data management architectures. The good news is that because cloud computing has become prolific in the financial sector, you no longer need to start from the ground up.

Instead, you can leverage existing data management architectures and best practices to create a staunch technical base for your program. In fact, Goldman Sachs recently released an open-source version of their open-source data management platform, nicknamed Legend. The purpose of Legend is to provide a “shared language” for the derivatives market to improve data sharing and interoperability between different systems and it's already being used by data engineers globally.

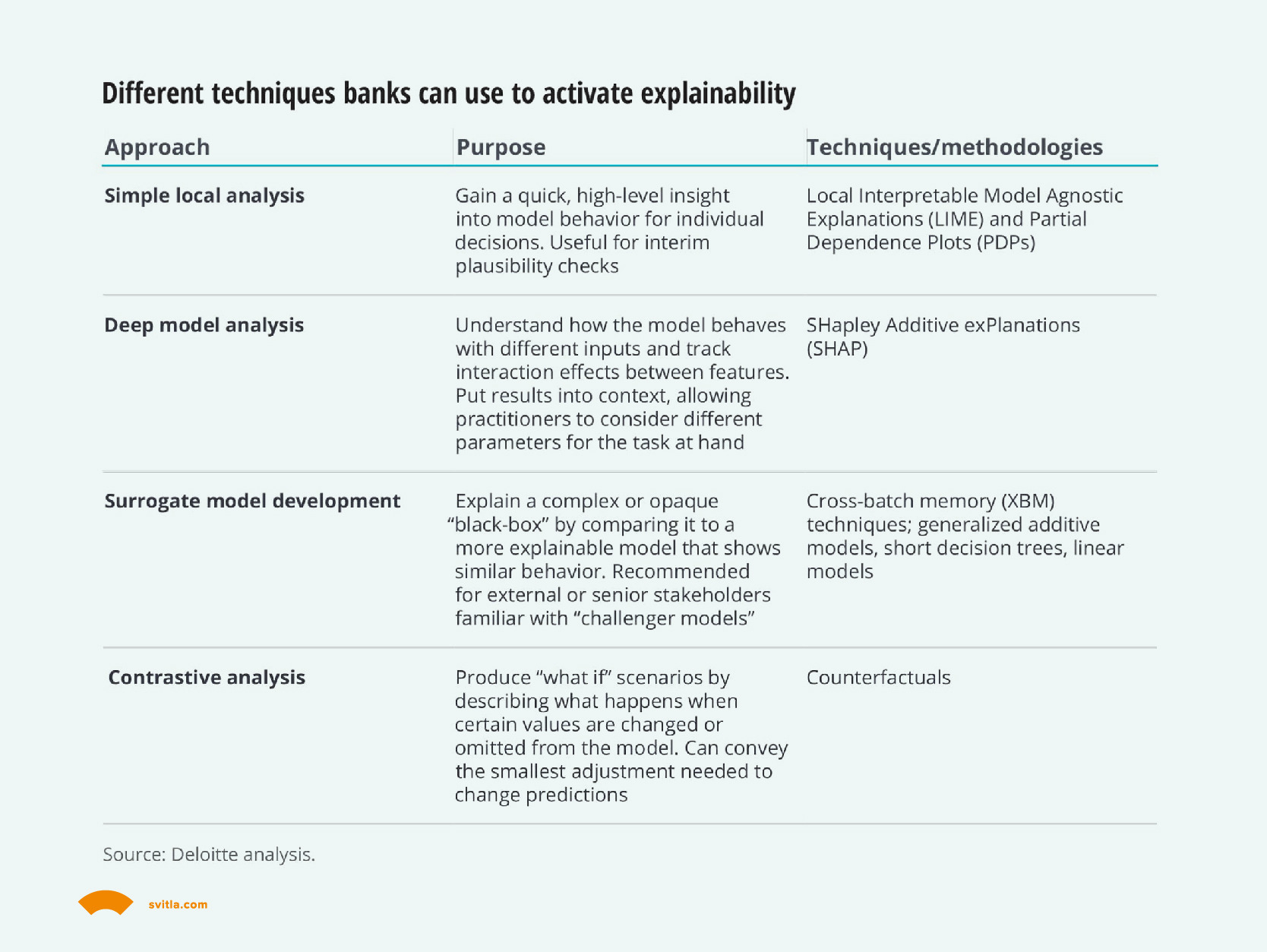

Explainability

ML and AI models often operate as “black boxes”, meaning that no one knows their exact method for generating results. In many financial services use cases, this factor raises regulatory challenges in terms of transparency and auditing. Lack of explainability restricts supervisors from analyzing the analytical process and thus approving its usage. Market players, in turn, are reluctant to fully disclose their models’ performance characteristics to protect intellectual property.

In response, a new domain of explainable AI (XAI) models has emerged:

XAI techniques can help understand which variables impact the model predictions and which steps the models took to reach a decision to reassure regulators and consumers alike of the model’s trustworthiness.

Bloomberg, for example, plans to release empirical metrics for its liquidity models to improve their explainability. The feature may become accessible on the Bloomberg Terminal liquidity screens by the end of 2024.

At Svitla Systems, we have successfully used a combination of Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) explainability methods to validate the performance of a sentiment analysis model.

Model Robustness and Validation

A flurry of big data analytics pilots is underway. Yet, as much as 87% of data science projects are never deployed in production.

The most common reason for shelved data analytics projects in finance is lack of data, which results in poor model performance during validation. The model shows high-accuracy results in training but then fails to maintain the same bar on a new (validation) dataset.

During the model validation stage, it’s important to test all model components — input, processing, and reporting — to prevent any performance issues or biases. Ongoing model monitoring should also be in place to avoid concept drifts.

In fact, various regulatory frameworks require financial companies to implement proper internal analytical model validation and performance monitoring practices, such as the European Central Bank annual validation reporting requirement or the Basel Committee’s standards.

An experienced machine learning development partner should help you navigate the risk management and compliance requirements of deploying ML models into production.

Conclusion

From lower market and cyber-risk exposure to new revenue enablement, big data holds big promises for the investment banking industry. But to capture the benefits, organizations must address the adoption blockers — limited data access, suboptimal data infrastructure, and complex regulatory requirements.

Implemented once, an effective data management architecture will act as a backbone for multiple data analytics use cases, supplying your teams with real-time, high-fidelity insights. Svitla Systems consultants would be delighted to further assist you with building a strong business case for data-driven transformations. Contact us in the USA, Canada, Poland, Mexico, and Ukraine.